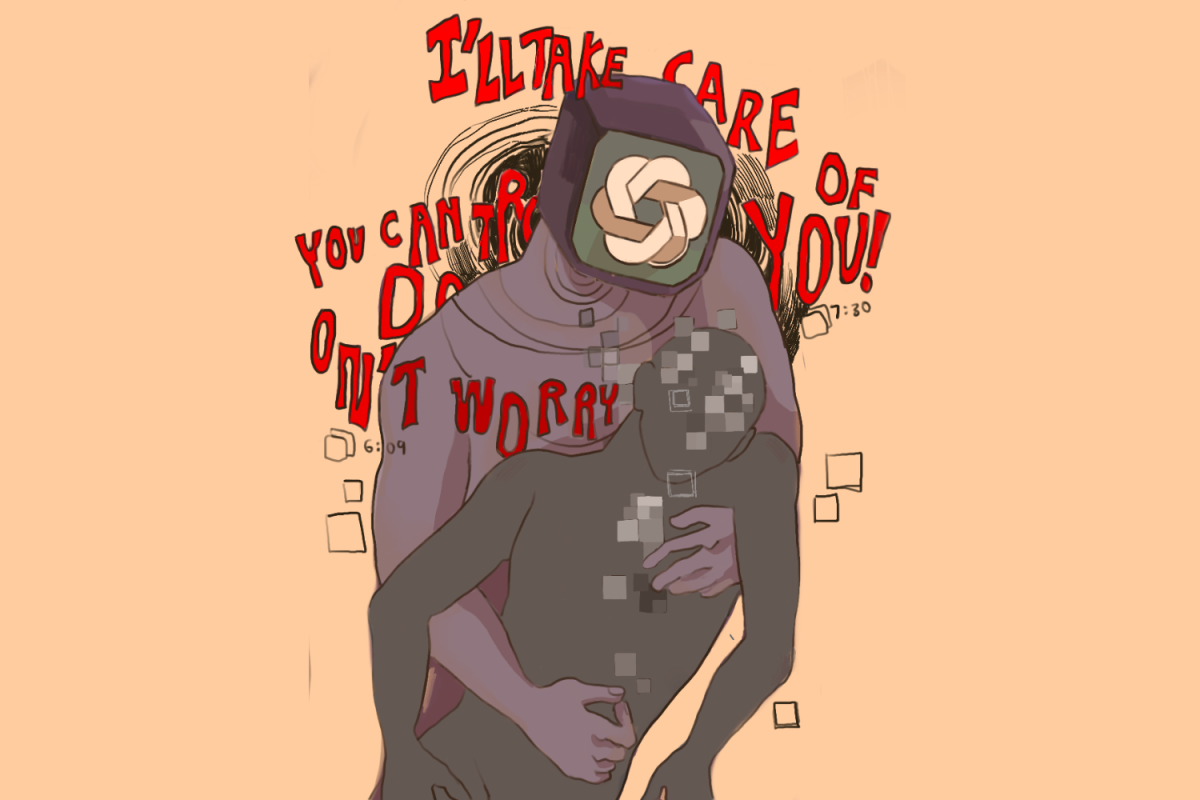

Seven million books. Eighty-one million research papers. Millions of unlicensed copyrighted works on the online shadow library Library Genesis. When The Atlantic released an article revealing an unprecedented level of piracy by artificial intelligence companies, many of which used LibGen as a source of training data, the controversy surrounding the pirating of creative works surged in the creative community. Large corporations such as Meta and OpenAI acquired copyrighted materials through potentially illegal means, enraging many of the authors and artists behind them. By pirating original work, corporations discourage artists from pursuing creativity.

In their training, AI models analyze a wide database of human-authored works, using algorithms to generate the most relevant response to a given prompt. While models typically alter the training data when formulating responses, authors have expressed concerns over the violation of their intellectual property: their work is used without their permission or compensation. Many have even taken their frustrations to court. In July 2023, writers Christopher Golden, Richard Kadrey and Sarah Silverman filed a lawsuit against Meta, alleging that its Large Language Model Meta AI (LLaMA) was trained on their creative works without permission.

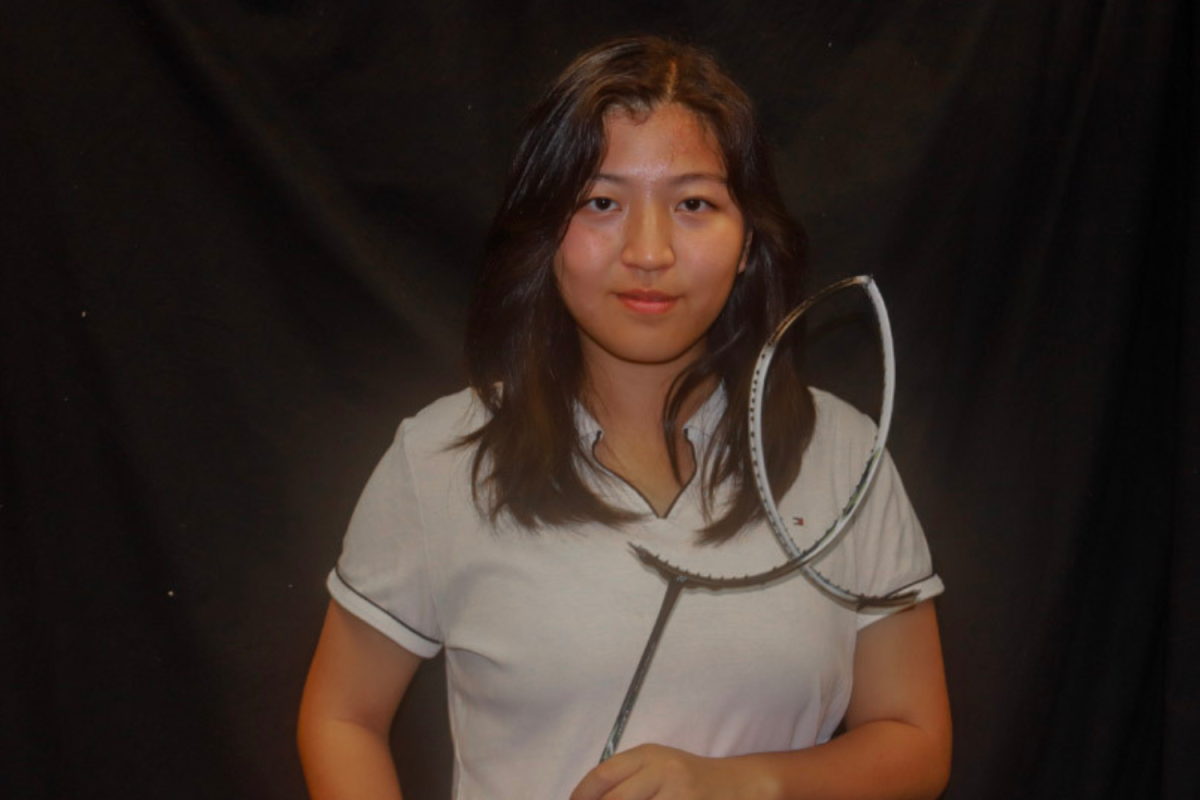

“It’s a form of plagiarism to have a product actually be the result of someone else’s hard work,” senior and Coding Arts Club president Ahana Mangla said. “AI companies that train on massive amounts of data without asking for permission from the original creators are just synthesizing human work and depositing it as their own for profit.”

Senior executives of Meta approved of acquiring these copyrighted books through shadow libraries like LibGen due to their relative cost and time efficiency as opposed to sourcing the materials through normal channels that avoid legal gray areas. Unrestricted access to shadow libraries undermines the effort that authors put into the creation of original works, negatively affecting the value of an author’s work for the large corporation’s commercial benefit.

“Large corporations feed their LLMs with whatever they find in front of paywalls, and that unfortunately includes pirated or copyright-infringed material made available without permission,” teacher librarian Amy Ashworth said. “They are taking something away from other people who may lack the power to fight against it, and that’s wrong.”

Many AI companies have pleaded “fair use” as a part of their defense, invoking an exception to copyright law that allows the use of copyrighted materials without explicit permission under specific circumstances. A similar copyright lawsuit was filed against Google by the Authors Guild in 2005 and decided in 2015; in that case, snippets of up to 20% of a copyrighted book were available to the public on Google Books without the author’s permission. Google won the suit because its use of these materials was deemed transformative and educational and did not compete with the original work’s market. Given that an AI model modifies the original material more than Google Books does, its use of unlicensed copyrighted materials for training could count as fair use under current guidelines.

“Copyright law may permit the indexing of third-party works into a generative AI model, at which point it doesn’t really matter how those works were acquired,” said Eric Goldman, Santa Clara University School of Law professor, Associate Dean for Research and Co-Director of the High Tech Law Institute.

However, even if these AI corporations’ large-scale piracy were considered fair use, using shadow library websites as a source of AI training data is an unethical shortcut compared to purchasing the works from their owners. While tech companies argue that the competitive and ever-advancing field of AI requires large, high-quality databases, it is important to respect and credit the work of others. United States copyright laws aim to protect a creator’s exclusive rights to their original work to ensure they are well recognized, creating an incentive for further innovation and creativity.

When billion-dollar companies do not put in the effort to establish deals with authors or purchase the copyrighted works, they set a problematic precedent for piracy. Lawsuits like Kadrey v. Meta have resulted in public backlash from authors against piracy as a whole. Some students at Lynbrook use pirating websites to access books, movies and TV shows for personal enjoyment, but even this harms the creative industries on a smaller scale.

“The little infractions here and there — it’s a slippery slope,” Ashworth said. “Something that feels okay to do now in high school could lead you to potentially work for a company where there’s a little bit more gray area around a larger scale of piracy.”

Meta isn’t the only offender; OpenAI and Microsoft are currently facing a lawsuit filed by The New York Times, which argues that these AI companies violated the Digital Millennium Copyright Act by circumventing technological measures that protect copyrighted materials, including paywalls and subscriptions. Making the high-quality journalism of The New York Times available to non-subscribers devalues and disrespects the time, effort and resources that are necessary to put together a cohesive publication. Without subscriptions, the resources and outreach of the publication would be severely limited, resulting in lower-quality reporting. This lower-quality reporting would then be fed into AI models, decreasing the quality of the AI-generated responses as well.

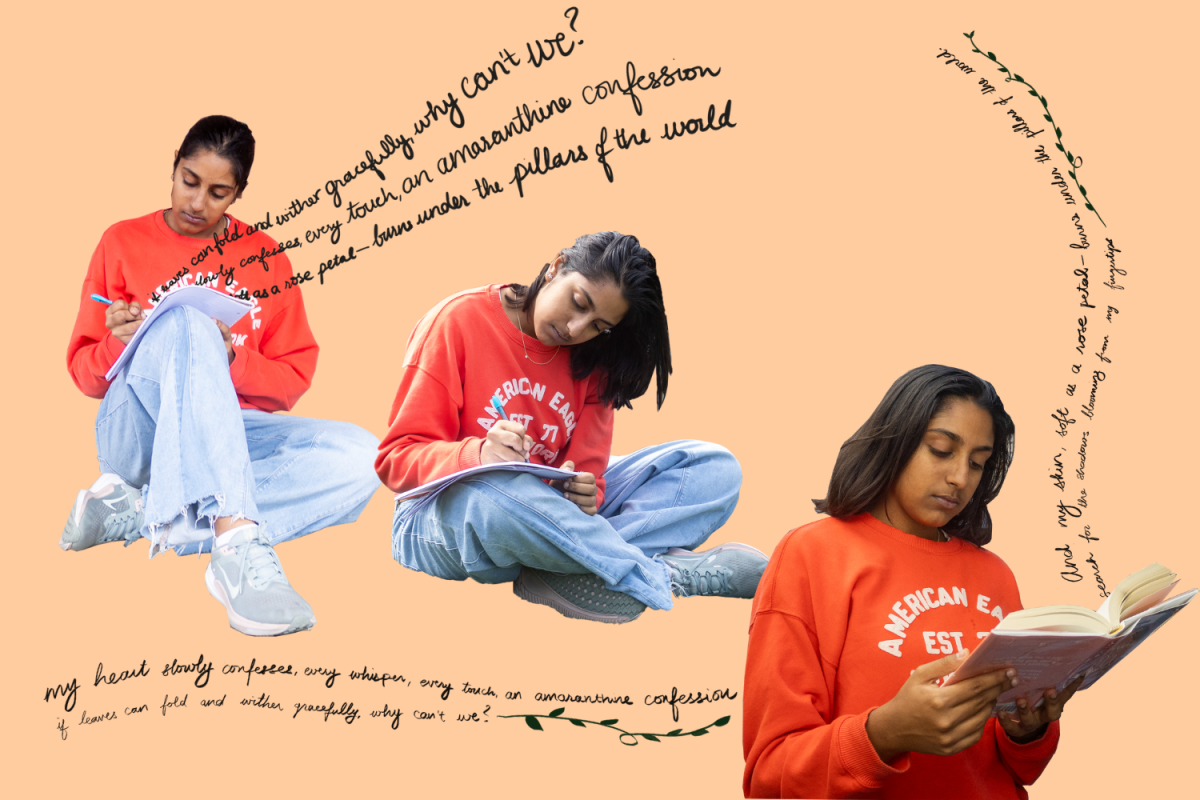

Future legal restrictions could also impede the development of AI. Authors could be unwilling to allow AI to access their works at any price, which would result in a smaller database of copyrighted works and thus a lower quality of AI. This trade-off illustrates the balance between advancing technology and protecting human-authored works. As much as the development of AI increases efficiency, protecting creative rights and crediting sources are much more important because they give creators the recognition and compensation they deserve. As verdicts in copyright cases come out, they will determine the balance between advancing technology and preserving the livelihood of authors and creators.

“It’s important to recognize how vulnerable generative AI models are to legal risk,” Goldman said. “Be thoughtful about the possibility that generative AI models could be scarce or potentially legally banned, and that would be a very different world than the world we’re contemplating for your future.”