In a controversial move, Meta announced in a blog post on Feb. 9 that it would implement a feature limiting the amount of political content recommended to users worldwide on Facebook, Instagram and Threads. While the new feature aims to curb the amount of misinformation and biased content online, its detrimental effects on users far outweigh its intended benefits.

The feature will continue to show political content from accounts users follow, but will stop proactively recommending political content in places such as Explore and In-Feed on Instagram as well as Feed and Pages You May Like on Facebook. While the feature is enabled by default, it can easily be turned off in users’ settings. Unfortunately, the move is impractical in several ways and falls far short of its intended purpose.

In the blog post announcing the change, Meta defined political content as “potentially related to things like laws, elections or social topics.” This vague language makes it difficult for users to know whether the content they post will be flagged, and it allows Meta to algorithmically promote content that it finds more friendly to advertisers in place of content perceived as overly political. Ultimately, the ambiguity of the feature threatens the transparency of social media platforms because users cannot know which specific content is being withheld from them or why Meta defines it as “political content.”

“Because Meta defines what information can be considered political, they could in theory exclude posts about Trump from being defined as political,” senior Sandhana Siva said. “Additionally, Meta conducts a lot of lobbying in legislatures across the country, so online content will continue to promote whoever they support.”

The lack of transparency from Meta in implementing this feature undermines its goal of combating the spread of misinformation. While Meta does offer users the choice to opt out of the feature, its decision to announce the feature in a relatively obscure blog post meant that few users would be aware of it. The company’s actions intentionally created an environment in which fewer people would be able to make an informed decision about whether to disable the feature. Instead, Meta should have alerted all users to the change with a notification.

“Transparency is very important in content moderation,” said Étienne Brown, an assistant professor in the Department of Philosophy at San Jose State University. “Some users pay close attention to what’s happening in the tech world, while most will think that nothing has changed without clear updates.”

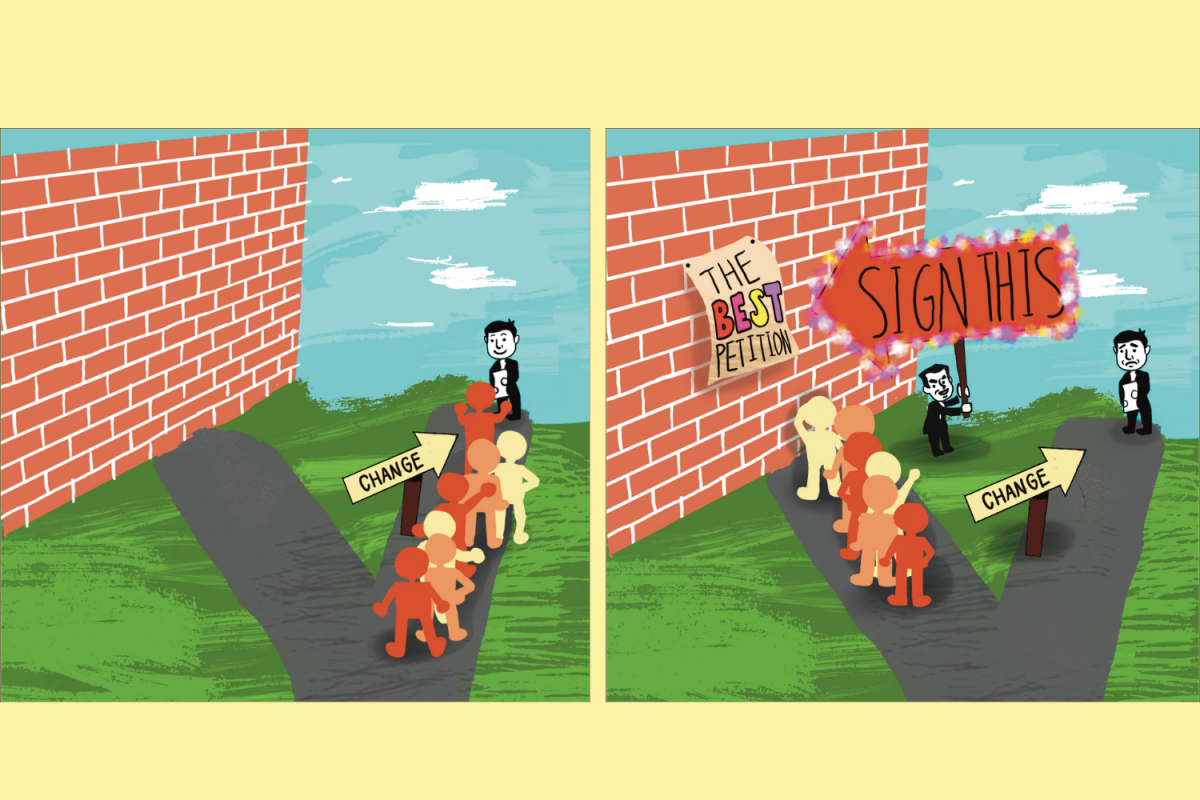

Limiting recommended political content and showing users political content only from accounts they follow exacerbates confirmation bias, the tendency to favor information that supports preconceived views and beliefs. Because the political content users see comes mainly from accounts they already follow, they tend to encounter information that reinforces their pre-existing beliefs, which deepens political polarization and makes it harder for them to form an educated perspective on issues. This leaves more users susceptible to believing false or misleading information, so the practice amplifies misinformation instead of limiting it.

“The feature creates an echo chamber where you hear what you want to hear,” Brown said. “You’re not going to be exposed to a diversity of perspectives.”

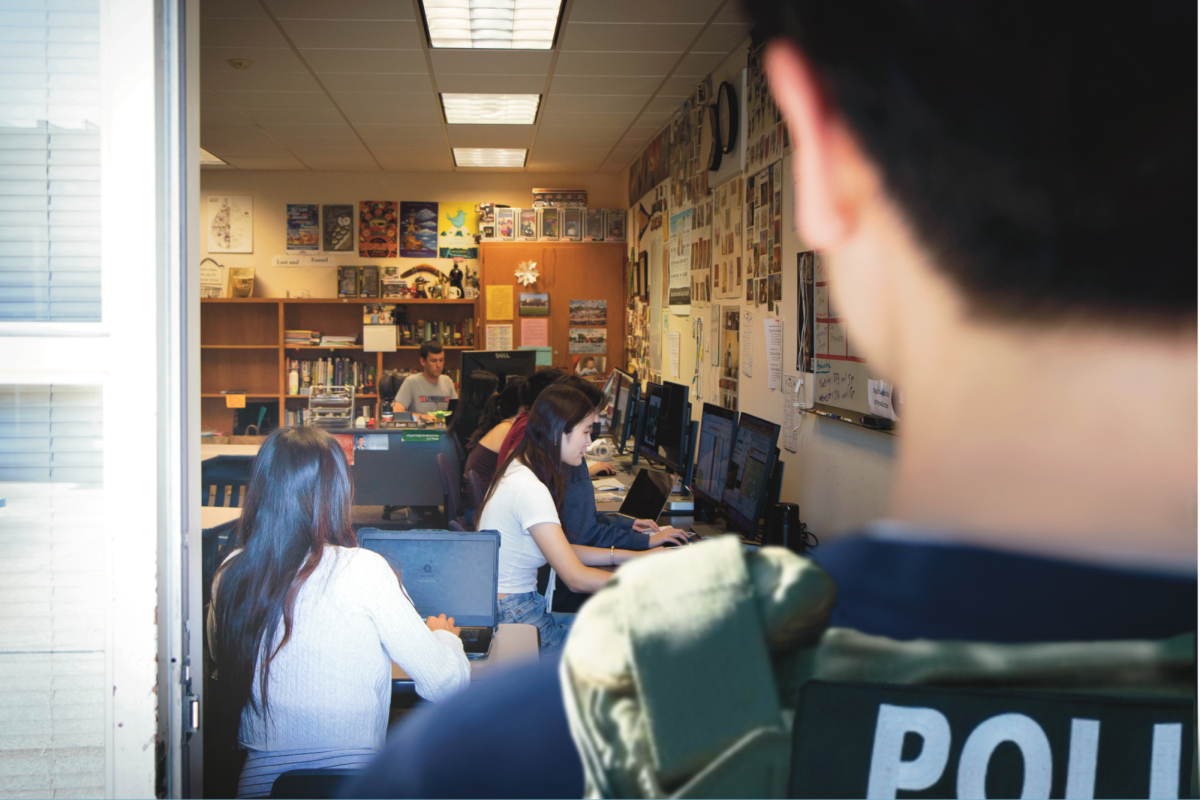

Meta’s other efforts to limit the spread of misinformation are commendable, and other social media giants should follow in its footsteps. However, the decision to remove recommended political content from feeds altogether has a net negative impact on how users interact on Meta’s platforms. Instead, Meta should invest more heavily in content moderation by hiring more workers for its content review teams so that they can identify and take down misinformation as accurately as possible. The company currently employs people full-time to be a part of its content review teams, which detect, review and remove violating content. Unfortunately, Meta has a history of poor working conditions for its content moderators. Thus, in addition to investing more in content moderation, the company should also support the mental health of its content moderators and create better working conditions for them.

“There are laws against false advertising in the United States, which are used to ensure that people can make good decisions on what to buy,” Brown said. “Similar logic can be applied to social media to protect users online.”

While Meta’s feature to limit recommended political content has good intentions, it is largely unproductive and even harmful to users. The wide range of problems caused by the feature demonstrates how important it is for social media giants to prioritize transparency and establish clear guidelines. Political content on social media is not always misinformation, and given the upcoming election year, it can help users stay up to date on politics and election outcomes. Many newspapers also use social media to share updates about current events and new articles, which gives many people easy access to news. Nonetheless, there is always going to be some level of misinformation circulating on social media. Social media users should fact-check the information they receive to ensure sources are reliable and do their own research on the topic so they are not misinformed.

“Social media companies have to be extra careful when they’re censoring political speech,” Brown said. “Ideally, social media should be a place where political content circulates freely, while misinformation and hate speech are moderated with transparency.”