Behind SF police’s controversial killer robots

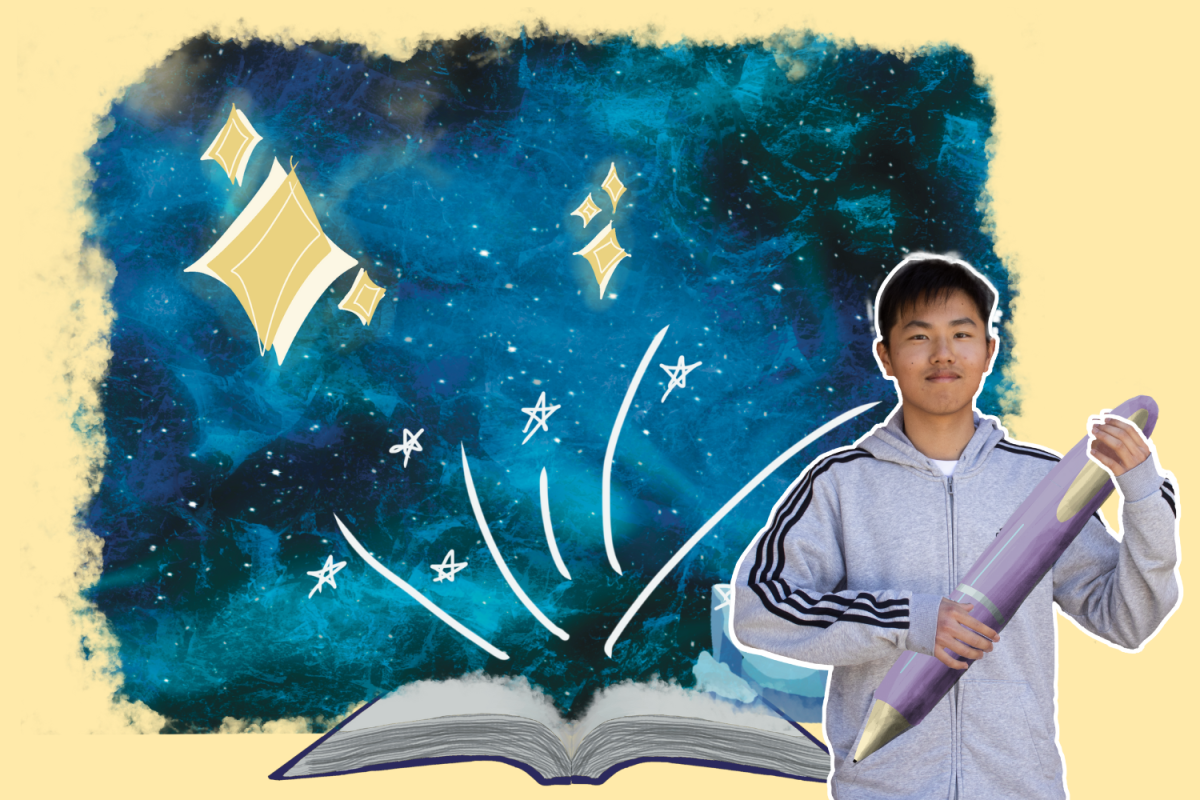

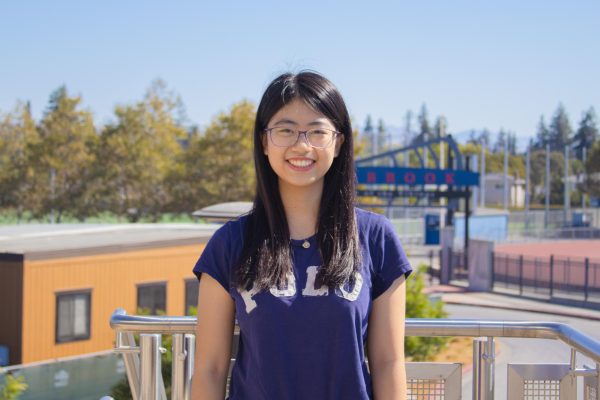

Graphic illustration by Valerie Shu and Apurva Krishnamurthy

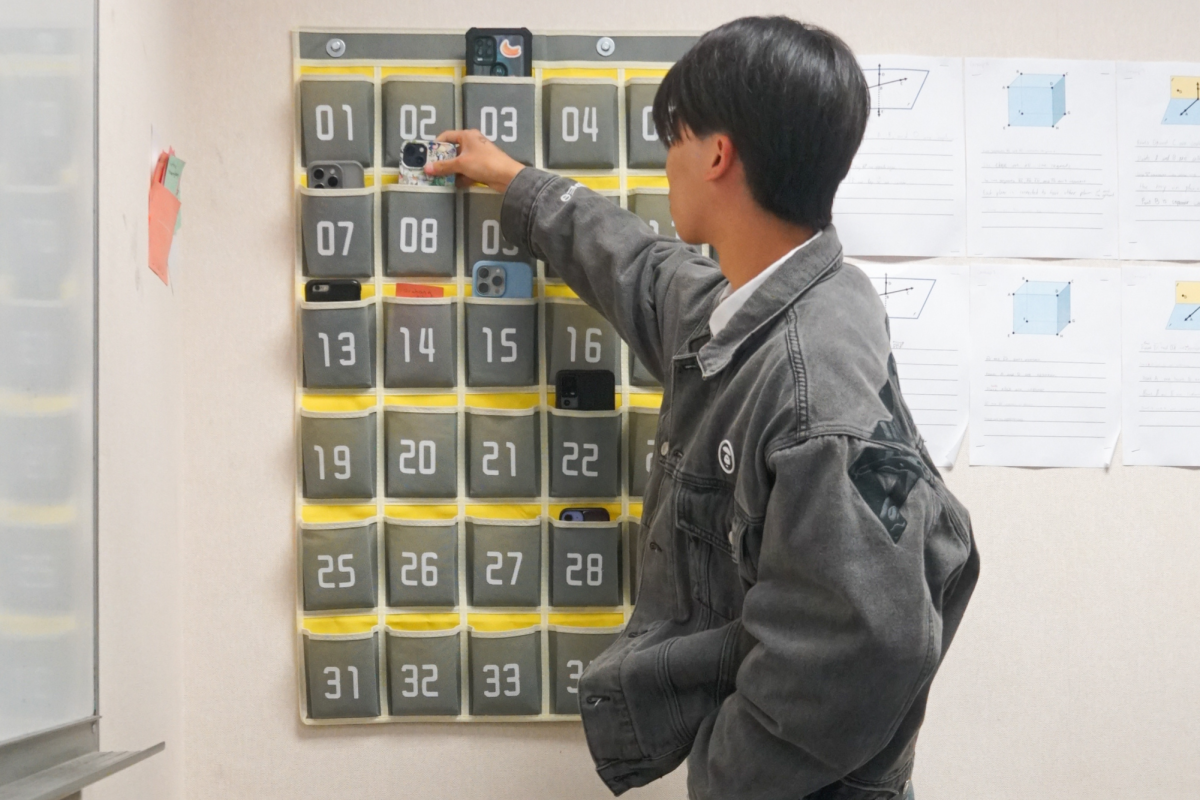

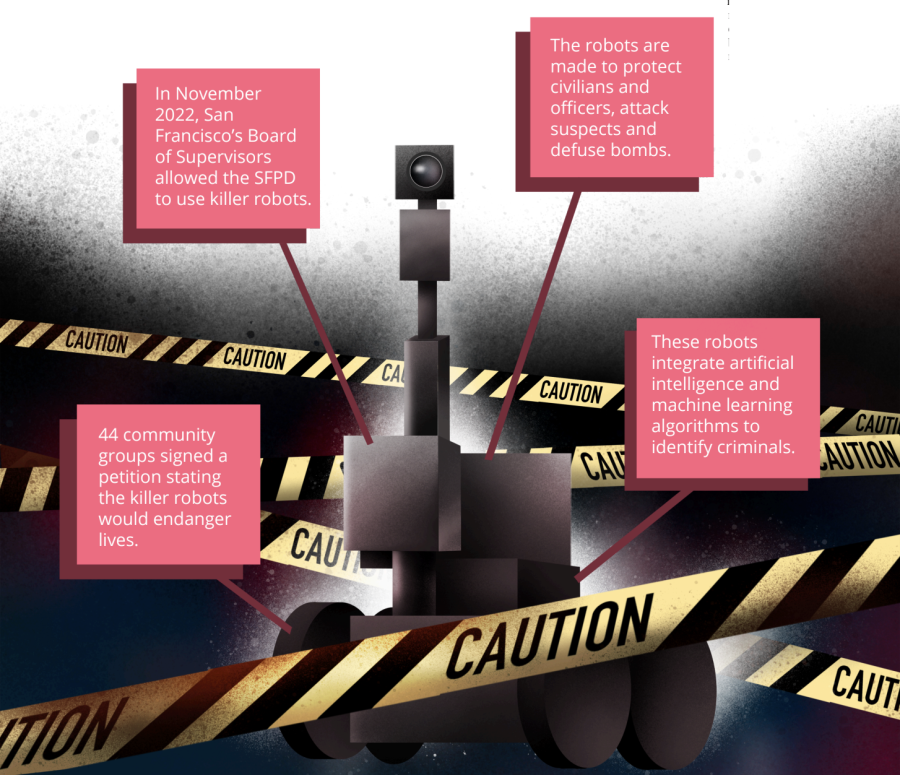

March 10, 2023

In November 2022, San Francisco’s Board of Supervisors initially approved a San Francisco Police Department proposal that would give local police the right to kill criminal suspects using lethal autonomous weapons systems (LAWS), or “killer robots.” Although the equipment policy was later reversed and sent back to committee for further discussion, the reversal is only a temporary reprieve, as the SFPD may revise and resubmit its proposal.

These robots integrate artificial intelligence and machine learning algorithms to identify criminals. Some robots already in the police force can serve two purposes: protecting civilians and officers, or attacking suspects. Most are used for defusing bombs or handling hazardous substances. While they can be modified to carry lethal weapons, those changes have not yet been made.

Concerns about the use of LAWS continue to arise, even though the robots may keep officers out of harm’s way. One concern is liability when an autonomous weapon takes lethal action. A robot cannot be held responsible, and manufacturers are exempt from a duty of care toward those the robot would be used against, according to exceptions under the Federal Tort Claims Act. Unlike regular citizens, police officers also receive qualified immunity, which in many instances shields them from personal liability, for example when they are deemed to have acted in self-defense. Changes have been made to hold more officers responsible for their actions, yet officers still receive considerable latitude due to the nature of their job. Supervisors are also not typically criminally liable for their subordinates’ actions.

LAWS are designed to identify a target and attack it independently, without a human operator, based on their algorithms and the data they collect from their surroundings. With LAWS, there is a risk that the information fed into the system is biased. Consequently, there will be situations in which a robot’s actions cannot be foreseen.

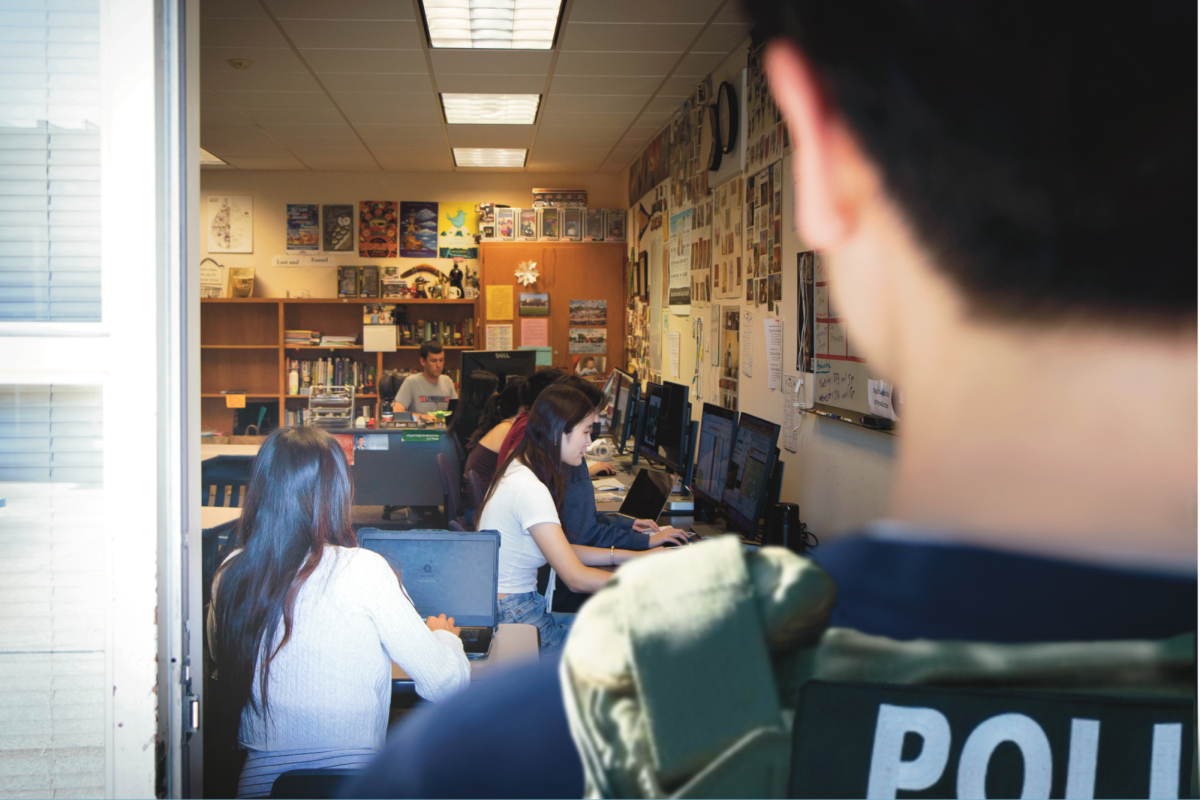

“There are still a lot of concerns with robots in terms of what type of information it collects and how it uses them,” Forensic Science Club President Thomas Zheng said. “All forms of technology or robots can be potentially hacked or malfunction which can lead to unintended consequences.”

However, the benefits of robots in policing are also worth noting, as demonstrated when police dealt with a series of package bombs in Austin, Texas, in 2018. After suspicious packages containing bombs were delivered to Austin residents, the police used robots to investigate inside the facility. Using their cameras and manipulator arms, the robots read a package’s tracking number, which helped identify the suspect. Later, a squat, steel robot was used to search the suspect’s house in Austin to make sure it was safe for human investigators to go inside.

In Dallas, a robot has already been used with lethal force. In 2016, the Dallas Police Department used a Remotec robot armed with an explosive to kill a sniper who had killed five officers and injured seven more. It was considered the safest option available at the time to stop the suspect.

“If you are using an autonomous killer robot, in what situation is it acceptable to kill someone?” Computer Science Club President and senior Ryan Chen said. “Even with remote-controlled robots, if police officers are able to harm people from behind a screen, they might become disassociated with what they’re doing, which makes it easier for them to abuse the technology and over-police.”

De-escalation is an essential part of a police officer’s role, and officers read subtle body language from a suspect in ways that machines may not be able to match. Under the International Covenant on Civil and Political Rights, “every human being has the inherent right to life,” but without an opportunity for de-escalation, it is unclear whether suspects will be given a last-minute chance to surrender.

Perhaps as AI improves, machines will be able to take on the role of officers in dangerous situations. Currently, however, they lack fundamental “human” qualities, and that concerns critics of LAWS.

“As machines, fully autonomous weapons cannot comprehend or respect the inherent dignity of human beings,” said Professor Bonnie Docherty from the Harvard Law School International Human Rights Clinic. “The inability to uphold this underlying principle of human rights raises serious moral questions about the prospect of allowing a robot to take a human life.”

Taking a closer look at the Bay Area, the San Francisco Board of Supervisors initially allowed the SFPD to use teleoperated robots with deadly force against a criminal suspect if the risk of loss of life to members of the public or officers is imminent and outweighs any other force option available. Because the policy was expected to help prevent atrocities such as mass shootings involving automatic rifles, the supervisors voted eight to three in favor of the plan.

“Robots help reduce the risk of injury to police officers or individuals in the Criminal Defense Department field,” Zheng said.

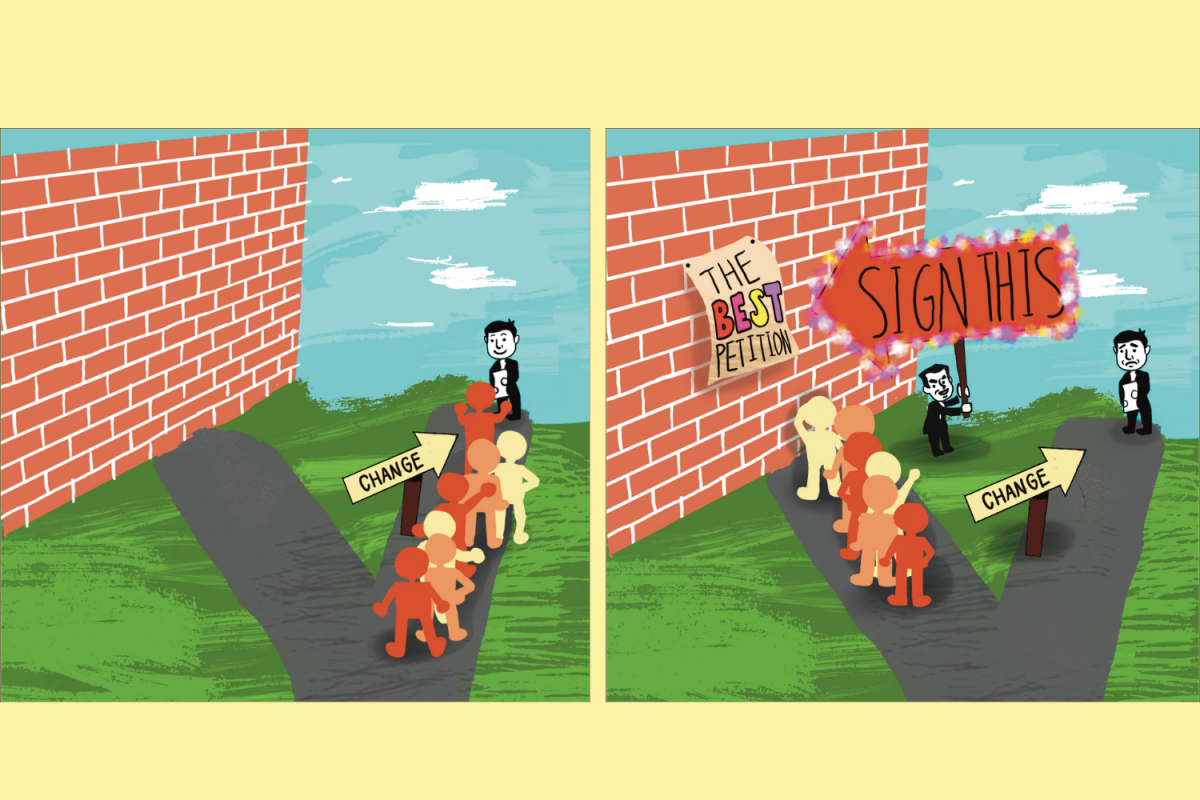

However, because the SFPD proposed using robots that were developed to disarm bombs in war zones, many nonprofit organizations and civil activists raised concerns, claiming that the policy encourages the militarization of the police force. Forty-four community groups signed a protest letter stating that the policy would needlessly endanger lives and make the public feel uncomfortable under any circumstances. Amid active protests, the board voted eight to three on Dec. 7, 2022, to ban the use of killer robots, sending the policy back to its Rules Committee for further discussion.

The San Francisco city government has been concerned about the abuse of technology in policing since at least 2019. On May 14, 2019, the Board of Supervisors passed the “Stop Secret Surveillance Ordinance,” which regulates the use of surveillance technology by city departments, including law enforcement agencies. Supervisors believed the ordinance would address public privacy concerns, such as how long data is stored, and prevent racial discrimination that the technology could cause.

“Studies show that when new technology comes around, everybody initially goes through a fear and distrust phase and it’s a natural part of accepting new things,” computer science teacher Bradley Fulk said. “Whether that technology goes away or not depends on how effective it is. However, I still do not trust a robot’s ability to make important decisions relating to life and death based on artificial intelligence and machine learning, at least yet.”