Isolated in online social spaces: The filter bubble algorithm

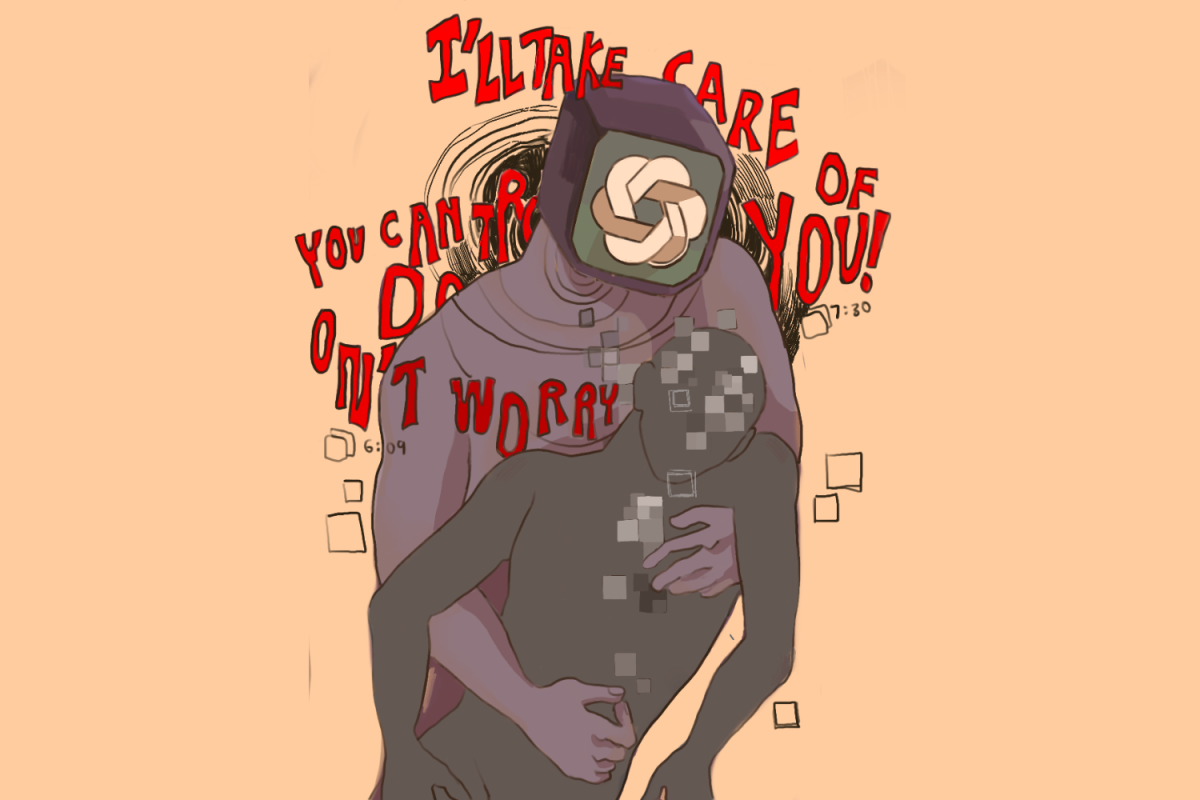

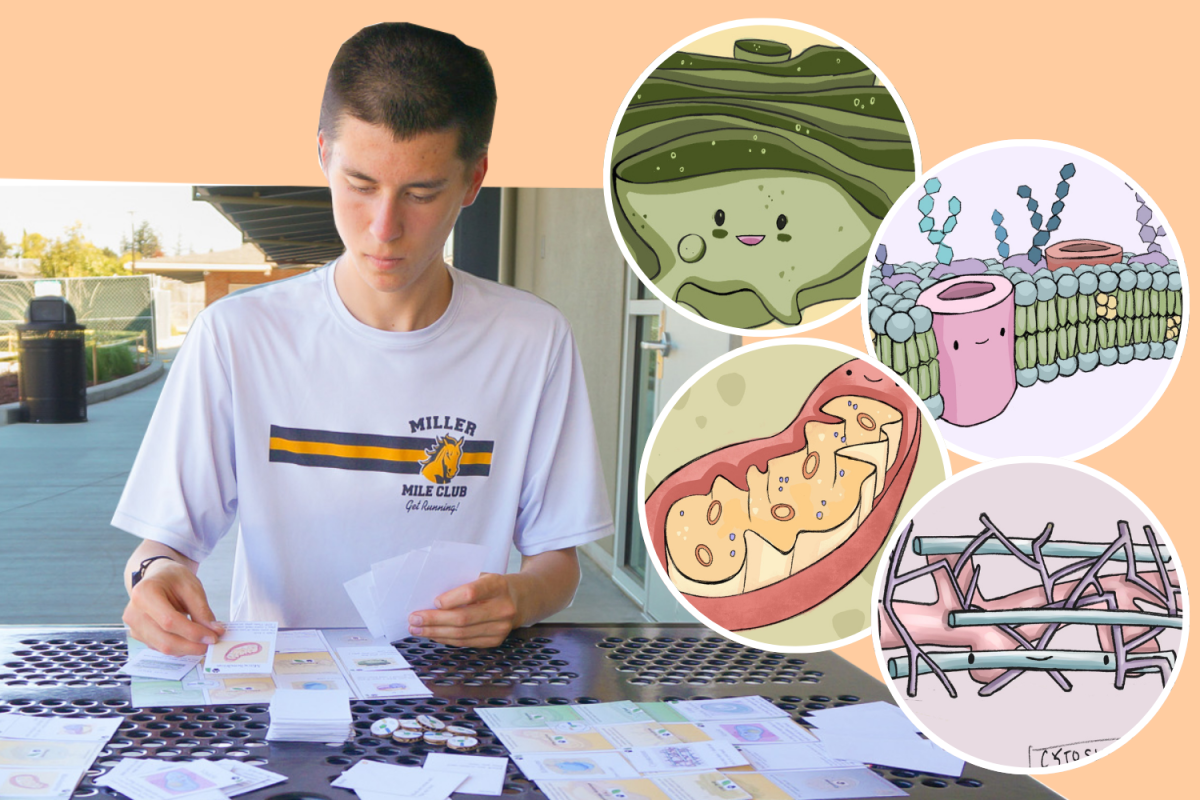

Graphic illustration by Alara Dasdan

February 19, 2021

As a regular social media user scrolls through their Instagram feed, engrossed in the familiar sight of Kendall Jenner’s newest ab routine, recipes for the pasta they tried just yesterday and rants about the latest casualties of capitalism, they hardly realize that they’re trapped in the algorithm used by corporations to increase screen time and decrease exposure to new ideas and opinions. Social media algorithms curate users’ feeds to feature only topics they want to see, whether those be hobbies, news or celebrity gossip. They purge anything uninteresting, especially differing political or ideological views, thereby worsening political discourse and reinforcing one-sided opinions on social issues. Largely without users’ knowledge, large companies like Google, Facebook and TikTok limit users’ perspectives through customized feeds.

A filter bubble, or a “personal universe of information,” as described by internet activist Eli Pariser, is created when social media algorithms filter posts to show users what they want to see. Drawing on stored data about which creators users follow or block and whose posts they like, companies weed out anything that does not fit a user’s specific profile. From the minute a user enters their name, algorithms are already personalizing their feed. Filter bubbles are restrictive by design. The more time a user spends on an app, the more the algorithm reinforces their bubble.

“Unfortunately, models of companies such as Google are designed so that they basically collect the information of what you’re interested in, and then they spread it to others,” said Ruzena Bajcsy, professor of EECS and Director Emerita of the Center for Information Technology Research in the Interest of Society at UC Berkeley.

Tech companies use these algorithms to maximize watch time and engagement with their services, which in turn increases advertising revenue. Over time, the companies filter content based on users’ own interactions with it, creating an endless cycle that often leaves users in the dark about social or political issues outside of their sphere.

“If algorithms are going to curate the world for us, then we need to make sure they’re not just keyed to relevance,” Pariser said in a 2011 TED Talk. “We need to make sure they show us things that are uncomfortable or challenging or important: other points of view.”

Without a diversified perspective, it becomes difficult to understand, or even know about, opposing ideas, an essential factor for healthy discourse. The effects of this are evident in current social media fights, which are more often about attacking the other party as a person rather than debating their beliefs.

“We live in a global geopolitical environment, and we have to have inclusiveness of conversation on all topics,” said Dr. Catherine Firpo, professor of psychology at De Anza Community College.

Continuing to view content that confirms an individual’s existing opinion is pleasing to the ego and strengthens the filter bubble. The result is a psychological phenomenon called confirmation bias, which describes how individuals seek out new information, or interpret existing information, in ways that fit their agenda, feeding on the human need for validation and comfort.

An example described in Neil deGrasse Tyson’s MasterClass illustrates the reality of confirmation bias. In an unnamed experiment, the experimenter first took aside a group of people, all of whom had some faith in astrology. The best-written horoscope will fit any person, so reading a random one aloud will spark a reaction. The experimenter read aloud a random horoscope and asked the group whether or not they believed it applied to their sign. The study found that, for most of those who were sure the horoscope was theirs, it had not actually been written for their sign.

The vague, broad nature of horoscopes is what makes this experiment successful. The human brain only picks up on the elements that match existing opinions and information, overlooking anything that doesn’t fit in. When the brain already filters out anything irrelevant or different, computer algorithms designed to go through the same process send confirmation bias skyrocketing.

“Search engines on the internet are the epitome of confirmation bias,” Tyson said in his MasterClass. “There is no greater force in the universe than an internet search.”

So why doesn’t the brain realize it’s being trapped? When a person feels undoubtedly right or supported in their beliefs, they experience a rush of pleasure that makes them yearn for more, thus forming a habitual loop. But this pleasure is temporary and, in the long run, decreases tolerance toward other viewpoints. To fully understand a conflict or policy, interest in and access to all viewpoints are crucial, and these are exactly what filter bubbles inhibit. Individuals create a false reality in the world of social media in which everyone has identical political views, pop culture opinions and even fashion tastes.

One of the clearest examples of how the filter bubble algorithm groups users and contorts their impressions can be found on TikTok. The platform is a hub for many teenagers with varied interests and opinions, and its complex curation algorithms have managed to group users with similar interests and content history into a “side” of TikTok.

This phenomenon can set individuals up for a huge shock when they go out into the non-virtual world, where they can’t filter out others and are hit with the realization that people have a wide range of differing interests and beliefs. Perceiving individuality as a negative trait can be damaging to the world on a larger scale, affecting systems of politics as well as the individual. This way of thinking can lead to active avoidance of and hostility toward dissenting opinions, already evident in national politics.

“I think it’s imperative that we be able to look at all sides, that’s what democracy really is. It’s the freedom to respectful dialogue and you and I know that the word ‘respectful’ is a little bit challenging these days,” Firpo said.

In the aftermath of a particularly divisive election, the Capitol riots, and Joe Biden’s recent inauguration, America is in a state of extreme political polarization. Statistics published by Pew Research Center emphasized the extremity of this election, showing that about nine in ten voters believed that if their chosen candidate didn’t win, lasting damage could be done to the U.S. Since filter bubbles on social media are notorious for displaying information that the user has already shown interest in, users are also shown only content that matches their own political opinions. As a result, the polarization between the two parties increased as voters from each party were pushed to the extreme ends of the spectrum.

Recently, American voters’ incredibly low tolerance for opposing ideologies was showcased during the 2020 election season. Fox News has been a mainstream news source for many voters over the past few years, but after the network called Joe Biden “President-Elect” on Nov. 7, many Trump supporters began to spread “#DUMPFOXNEWS,” “#FAKEFOXNEWS,” “#FOXNEWSISDEAD,” and multiple other hashtags all over social media apps. After being trapped in a filter bubble with the Trump administration encouraging conspiracy theories such as QAnon and fake news sources, supporters have become accustomed to online extremist right-wing ideas and desensitized to real-world politics. In order to combat the rising levels of intolerance, it’s crucial to step out of one’s own filter bubble and regularly engage with those who hold differing views.

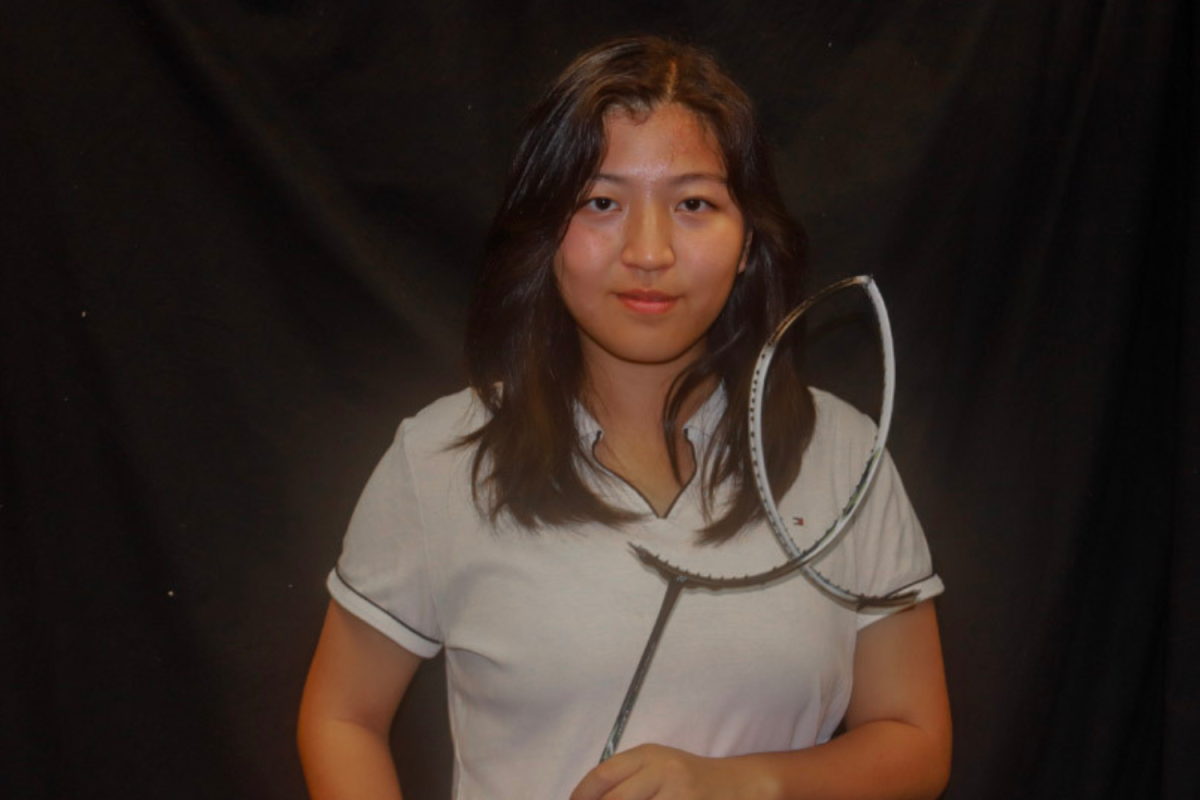

“Tolerance is important to an extent, we should be able to get along with people with slightly conflicting political opinions in school when we’re with each other,” said junior Claire Lin. “But if they’re a fascist or a neo-Nazi, they should be expelled. You should not be tolerant of these kinds of ideologies.”

There’s no doubt that social media played an instrumental role in this oppositional climate. Because filter bubble algorithms are designed to weed out differing views, they curate users’ feeds to show only content from those who share the same worldview.

“We have opened a Pandora’s box with technology, now we just have to learn how to live with it,” said Dr. Bajcsy.

While it may be uncomfortable, interacting with dissenting views is necessary for personal growth and a functional society. It’s important, if not required, for our future to actively avoid falling into the filter bubble cycle.