Google’s Project Nightingale infringes on privacy

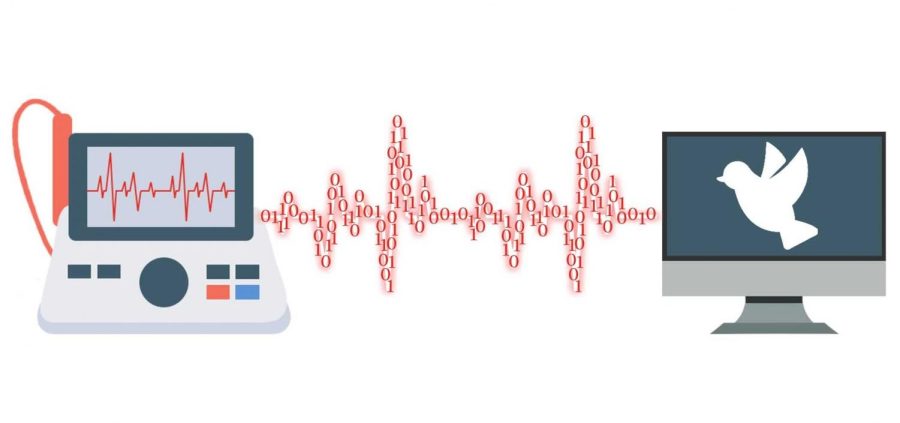

Project Nightingale allows Google to harvest the medical data of 50 million Ascension Healthcare patients across 21 states and upload it to a cloud database without their consent.

December 11, 2019

Tech giant Google has long pushed the boundaries of data collection, and its latest venture, Project Nightingale, is no exception. Under Project Nightingale, a trip to the doctor’s office becomes an entry in Google’s database: a person’s life, unknowingly condensed into numbers on a screen, in violation of basic human rights.

Fittingly named after Florence Nightingale, a trailblazer in modern nursing and medicine, Project Nightingale is a health data-harvesting venture conducted by Google and its partner Ascension Healthcare, the second-largest healthcare provider in the U.S. Nightingale aims to use artificial intelligence and machine learning to develop effective treatment plans for individual patients. The project allows Google to harvest the medical data of 50 million Ascension patients across 21 states and upload it to a cloud database without their consent, underscoring the need for updated digital privacy legislation. Though Nightingale aims to improve medical treatment through the power of computers, the project’s ends do not justify Google’s means.

This project highlights a long-standing ethical concern with data collection practices. Patients typically assume that their medical information is confidential, but Project Nightingale betrays this trust. Furthermore, the fact that Google collects this information without patients’ knowledge raises the question: what other “confidential” information do more than 150 Google employees now have access to?

“Google gives people the illusion of control over personal data, but makes it in fact difficult for them to effectively make choices leading to more privacy protections,” said Nicolo Zingales, associate professor at Leeds Law School.

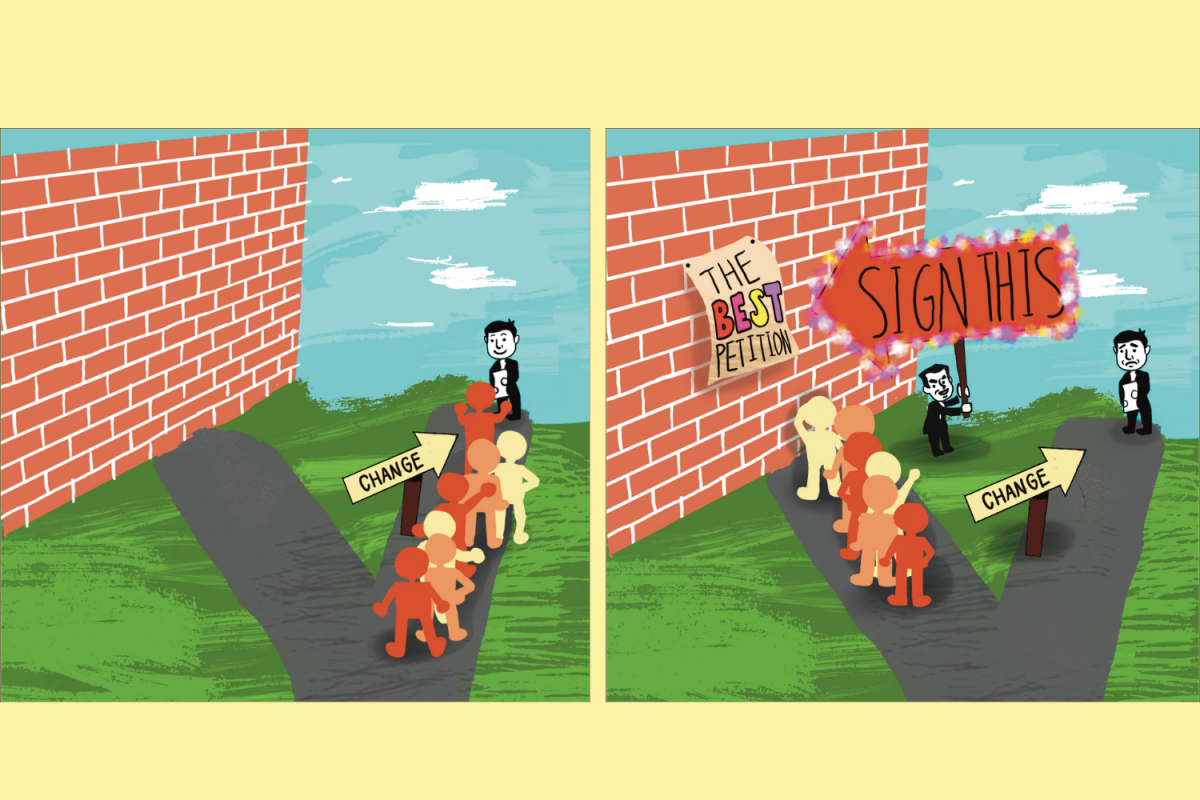

More concerning is the potential for Nightingale to set a precedent for data harvesting practices in the future. Other companies may follow Google’s example, and confidentiality may soon become a relic of the past as the world progresses into an era of entirely online data.

Although some laws govern these practices, restrictions on medical data have clearly not kept pace with technology.

“The unique sensitive nature of medical information warrants greater scrutiny and regulations which address the risks posed to individuals by this data being used by people who are looking at it for one purpose and converting it to something else,” said Al Gidari, Professor and Consulting Director of Privacy at Stanford Law School’s Center for Internet and Society. “What we are really talking about is if we should have rules that limit third-party access to data that has already been collected. I would like to have more clarity regarding how, when, and where this data can be used and how it is audited.”

Google claims that it adheres to the guidelines set by the Health Insurance Portability and Accountability Act (HIPAA), which protects such data, but Ascension employees have expressed concerns regarding the project’s compliance with HIPAA. Additionally, HIPAA was written and signed into law in 1996, when legislators could not have foreseen the extent of Google’s massive online data harvesting.

“I don’t think the general public knows the full extent of the use of their medical data, as consent given for medical purposes typically enables the processing of data for purposes that go beyond the specific treatment of the individual in question,” Zingales said. “Unfortunately, to a large extent this is due to the extraction of broad consent combined with a vague purpose specification, which may well be in violation of several data privacy laws.”

A major concern is that such data is inadequately protected. Data is generally encrypted at the front end (the user interface) and at the back end (the server), but not in between, where it is stored, which makes third-party access concerningly easy.

Notably, the lack of adequate enforcement of laws surrounding such practices has allowed other companies and third-party organizations to gain access to personal data.

“Despite a growing awareness of the range of existing data practices, we have a problem of enforcement,” Zingales said. “I am concerned that enforcement will not be effective without closer oversight of what happens ‘under the hood.’ I think that we need more resources for regulators and a global and holistic coordination between authorities investigating data practices. The enactment of comprehensive data privacy legislation in the U.S. would be a very welcome step in that regard.”

Ethical alternatives to this kind of data harvesting do exist and have been put into practice. For example, the University of California (UC) system informs patients and obtains their consent before collecting their data from its affiliated hospitals. The UC system also ensures that patients know where their information is going and what is being done with it. The success of policies that respect patients’ privacy shows that Nightingale’s infringement is not only unethical but also unnecessary.

“At the front end, the medical services collecting the data should not have done what they did without having patients’ consent,” Gidari said. “It is better that individuals have the option to participate. There should be an opt-in system through which people have the right to choose whether their data can be used for that purpose.”

Minnesota Democratic Senator Amy Klobuchar, who has sponsored legislation on health data privacy, has affirmed that Project Nightingale underscores the need for further legislation and that the nation’s lawmakers must do more to protect citizens’ privacy.

“Having patients’ permission is a matter of safety in terms of privacy and where they do not want their information to go,” said Emily Mao, a junior and Good Samaritan Hospital volunteer. “I can get access to, for example, which department a patient is in, but I do not have access to the patients’ whole records, which I feel that only doctors and nurses should have access to. If you do not know where your information is going, it is even more concerning that the company handling it is Google because Google has such a big network.”

Nightingale’s data collection practices constitute a blatant violation of patients’ rights. The project highlights, more than ever, the need for new data regulations. Until such regulations exist, however, patients must stay aware of the risks to their own privacy and take steps to protect their personal data.