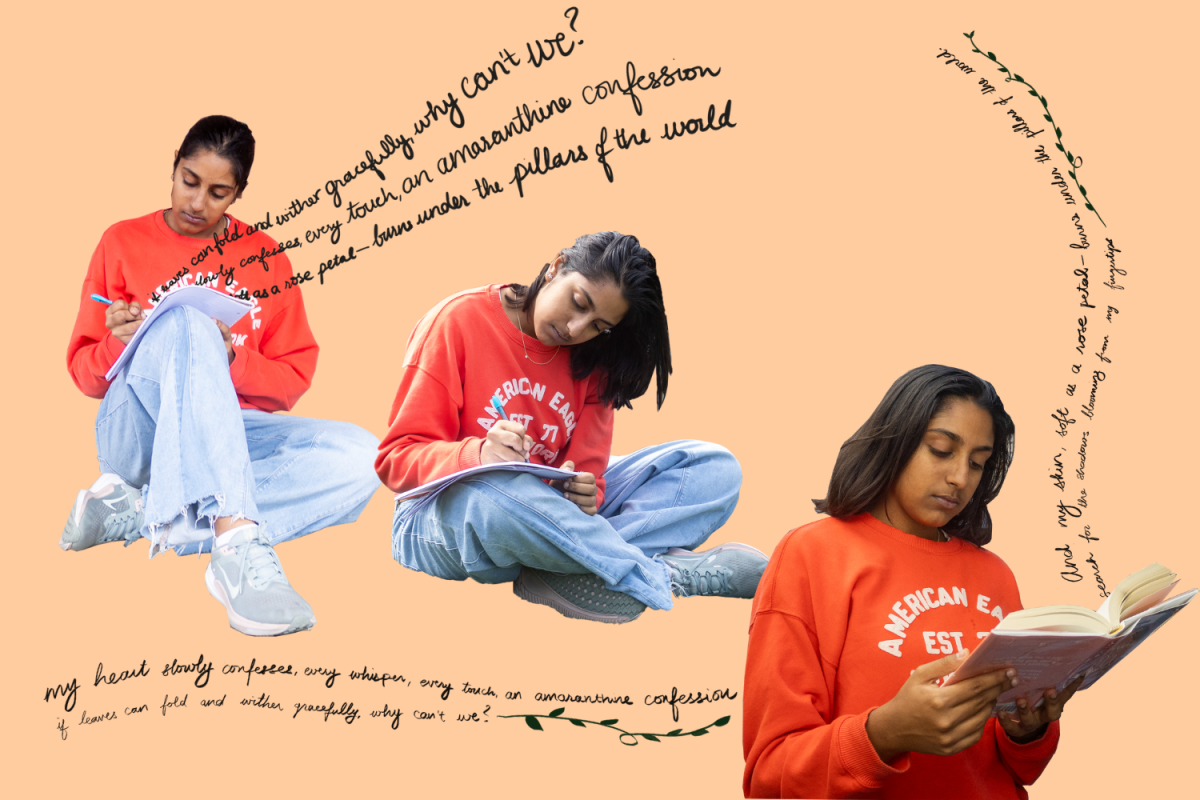

Hunched around a small glowing screen amid a nest of blankets strewn across her bed, junior Amolika Sudhir frantically refreshes Instagram as the app fails to load for the fourth time in a row. Frenzied fingers tapping against her phone case and nerves brimming with anxiety, Sudhir sighs with relief as the teal graphics finally appear, signifying the launch of the Share-On website.

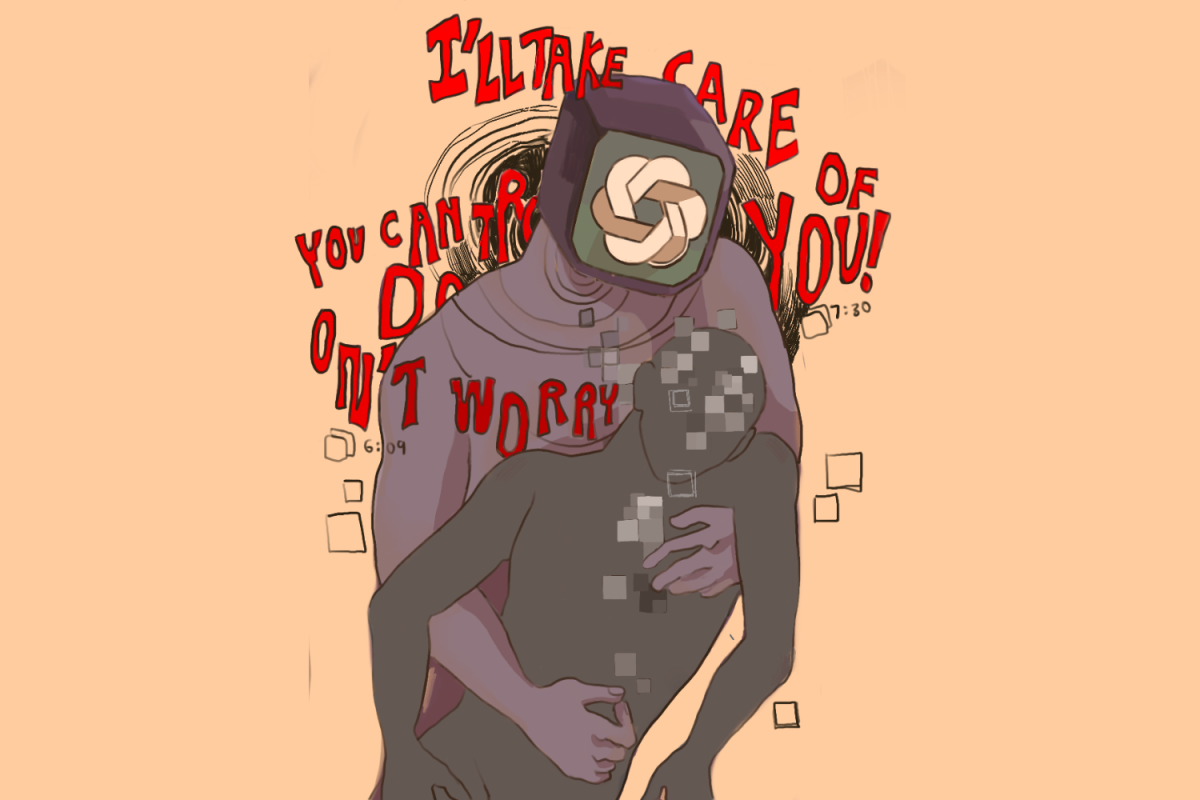

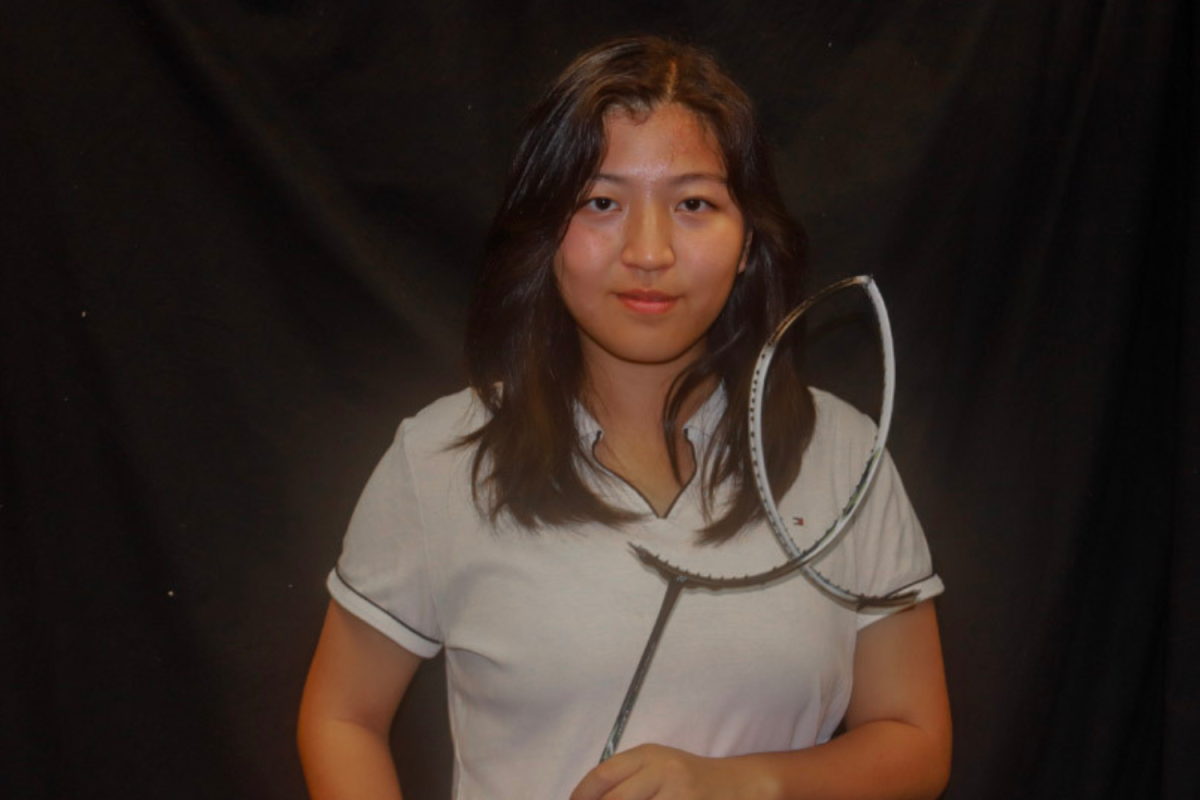

On Aug. 10, Sudhir and junior Sharon Lu debuted an AI chatbot designed to support mental health through their non-profit organization, Share-On. For students searching for support, Lu and Sudhir hoped to provide an anonymous and non-judgmental space to discuss anxieties and insecurities. The chatbot saw immediate success, receiving more than 60 queries in its first four days.

“It’s such an extensive process to get professional help, and if you’re someone that doesn’t really like talking to people, it can be a big obstacle,” founding CEO Sharon Lu said. “So we’ve made something that’s accessible and easy to use.”

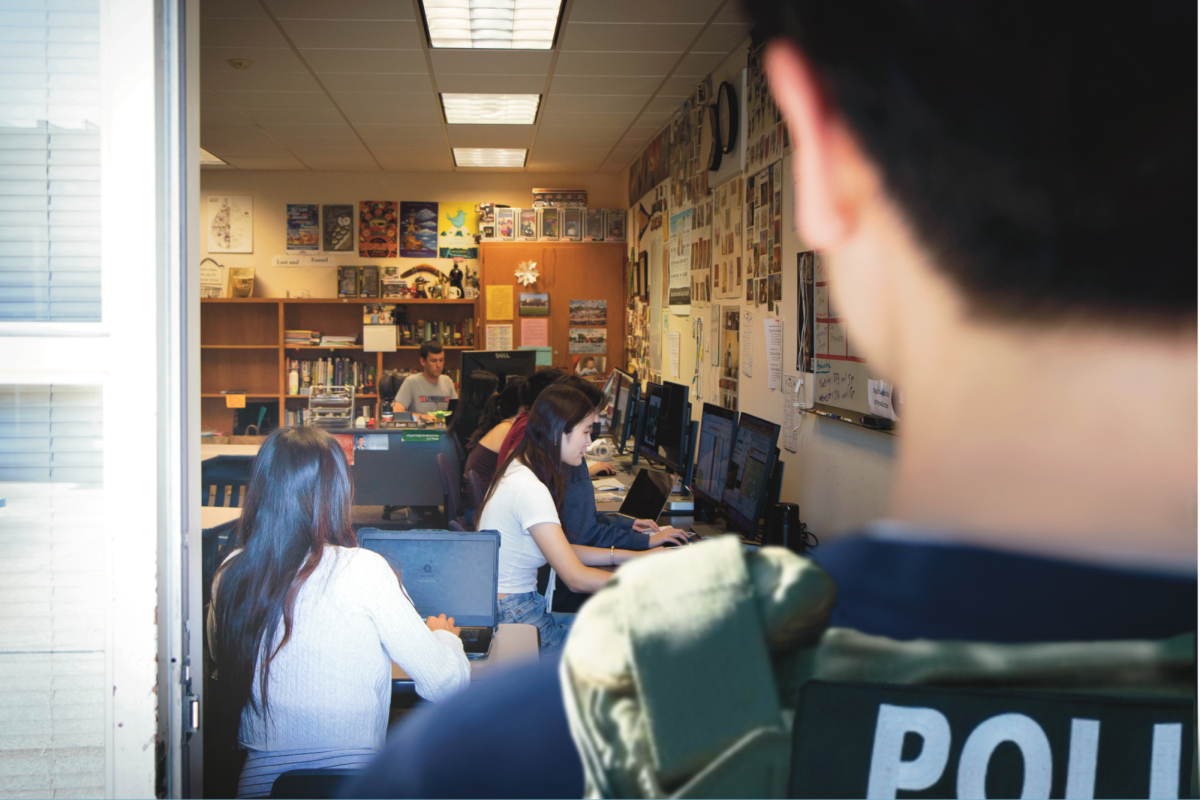

Earlier, during her freshman year, Lu saw the potential role AI technology could play in creating a chatbot that could effectively communicate with struggling teenagers. Once she met Sudhir in their sophomore year, the two came together to make their vision a reality. The team secured its initial $500 in funding by entering a pitch competition hosted by the Bay Area student-run Business Entrepreneurship Encouragement Program, which gave them the jumpstart they needed to fund their model. They then assembled a team of tech developers, public relations officers and a chief marketing officer.

“Making a chatbot of this scale, even for our most experienced developers, came with problems, since AI is pretty new technology,” founding CTO Amolika Sudhir said. “It took a lot of trial and error — being willing to keep going back and trying out different kinds of code and algorithms to see what works and what doesn’t.”

For instance, the team experimented with the chatbot’s response to self-harm prompts until they consistently received appropriate replies urging the user to seek professional help. The chatbot was trained on data from professional organizations and studies focused on mental health, such as guides created by SupportLinc, the National Alliance on Mental Illness and Mental Health Literacy, so that, unlike many other chatbots, it would not learn from the misinformation widespread on the internet.

Going into this project, Lu envisioned a resource that would specifically benefit her community. Having dealt with mental health issues herself, she believed that there weren’t sufficient resources to help people like her through these problems.

“At the time, I just sort of believed that mental health was something that I was making up,” Lu said. “I wasn’t able to open up about it. Not being able to communicate or get the help that I needed was a really big struggle for me.”

During the pandemic, many of her peers shared that they were going through similar struggles.

“No one really felt the ability to talk about their mental health issues, so everyone just kept it hidden and had this struggle inside of them,” Lu said.

Lu believes that mental health stigmas and a lack of communication have prevented teens from finding sufficient help. According to a Share-On survey of 51 Bay Area students aged 13 to 18, 84% of respondents found it moderately to extremely difficult to talk to people. To give the chatbot a friendlier tone and make teenagers feel more comfortable, Share-On incorporated common slang and emojis into its lexicon.

Following the launch, Lu and Sudhir plan to further develop their non-profit by hosting events that raise awareness about mental health and by promoting the chatbot to a wider audience.

“We’re starting with a target audience of people within FUHSD, but we hope to expand to the entire Bay Area,” Sudhir said. “We do hope to be something that becomes a staple resource.”

All conversations users have with the chatbot, as well as all data Share-On collects, remain anonymous. From user conversations, the team sees only the most common words, phrases and themes, and only after transcripts have been filtered through MongoDB, the platform they use to organize their data. They plan to use these findings to advocate for the support that students need the most.

For example, according to Lu, the team might notice a significant pattern of students saying that a specific issue, such as sleep deprivation, is notably affecting their lives and mental health. In that case, Share-On would use the chatbot data to push for schools to introduce strategies that support students with that issue, such as allocated time to rest during school hours or later start times.

Share-On hopes to collaborate with other organizations, such as Justice4All, to bring about further change in the mental health field for their peers.

Share-On’s Lynbrook ambassador Akhil Nimmagadda predicted that the program would do well among Lynbrook students, in line with the team’s hopes of reaching a larger audience.

“At Lynbrook, a lot of people believe, ‘Oh, I’m better than [seeking out support]. I go to Lynbrook, I can handle all the academic pressure,’” Nimmagadda said. “But some people actually do need the help, and they do better with help.”

As the team looks forward to the future of the non-profit, they hope to make a real difference for their peers and fellow teens struggling with mental health.

“Even as our generation grows out of the teen stage, for the future generation of teens, mental health is always going to be a prevalent issue — no matter how hard people try to reduce it,” Lu said. “How these issues are approached is still often from the perspective of adults choosing what’s best for teens. Our aim is to provide a resource for teens that will be accessible anywhere and anytime they can feel comfortable using.”