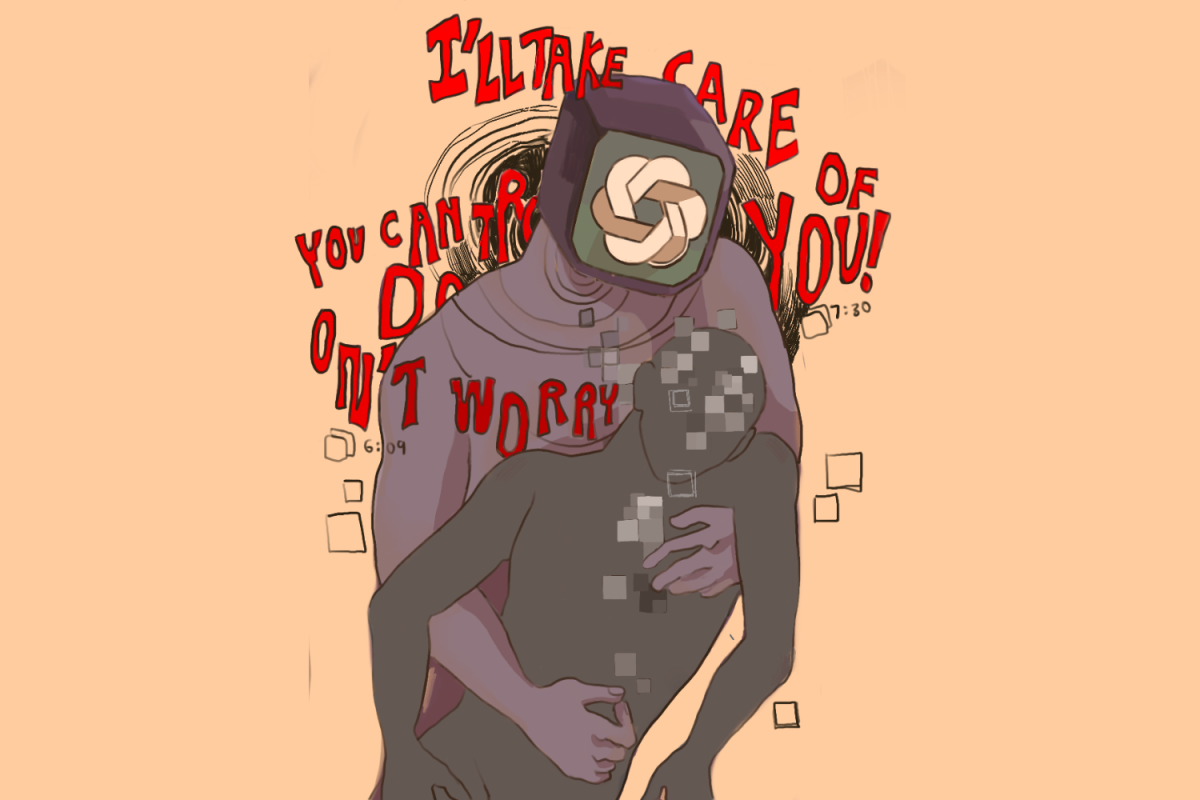

People often navigate websites and applications seamlessly, unaware of the subtle tactics website makers use to influence their decisions. These tactics are known as “dark patterns,” a term coined by user experience specialist Harry Brignull in 2010 to describe deceptive design techniques, such as disguised ads and hidden costs, crafted to manipulate user behavior, “making you do things you didn’t mean to.” While these patterns have long been recognized for their impact on user experience, their connection to data privacy is a particularly pressing concern in today’s digital age.

These widespread patterns often exploit cognitive biases, taking advantage of users’ psychological vulnerabilities. By prioritizing the goals of the designer or company over a user’s needs or preferences, dark patterns often lead to feelings of manipulation or frustration among users. Examples include misleading prompts, forced consent and intentionally confusing interfaces.

A common example of dark patterns appears in internet cookie dialogs. Cookies are small pieces of data that websites store on a visitor’s device, often for legitimate purposes such as improving the user experience or remembering login information. However, some websites exploit cookies in deceptive ways to track users’ behavior without their explicit consent or knowledge.

“Dark patterns not only compromise user trust but also erode the foundation of ethical design,” Toptal Clients product designer Michael Craig said. “As product designers, it’s our responsibility to prioritize user empowerment and transparency over manipulative tactics.”

For instance, some websites make it hard for users to opt out of cookie tracking by burying the option deep within privacy settings, which makes it difficult for users to maintain control over their personal information. Cookies also enable a personalized experience, in which users see advertisements or content based on their browsing history. Because these ads rely on discreetly collected user data, they often create a feeling of being watched.

Amid the landscape of dark patterns, misleading prompts stand out as one of the most cunning tactics. These deceptive messages or notifications are carefully crafted to steer users toward actions or decisions that don’t align with their best interests. A website might display a prompt suggesting that clicking a button will lead to a desired outcome when, in reality, it performs a different action. For example, many websites urge users to select premium options without disclosing the fees up front, hiding the cost of a feature until later in the process.

Dark patterns have immense power over users’ online behavior and decision-making, and they are especially deceitful when they coerce consumers into divulging more personal information than they plan to. One such practice, Privacy Zuckering, named after Meta CEO Mark Zuckerberg, manipulates privacy settings or consent mechanisms to coax users into sharing more than they intended.

Dark patterns can be deemed illegal when they breach consumer protection laws. For example, dark patterns that mislead users about product features or pricing may violate laws against deceptive advertising. Additionally, dark patterns that coerce users into disclosing personal information or consenting to data collection without clear disclosure may violate data protection laws such as the General Data Protection Regulation, which requires all data controllers to follow its seven major data protection principles, or the California Consumer Privacy Act, which creates an array of consumer privacy rights and business obligations regarding the collection and sale of personal information.

“According to the Free Software Movement, software that is not freely released to the public unjustly puts the developer in a position of power over the user,” senior Patrick Huang said. “Policy makers should proactively disallow developers from restricting, misleading and harming users through their software.”

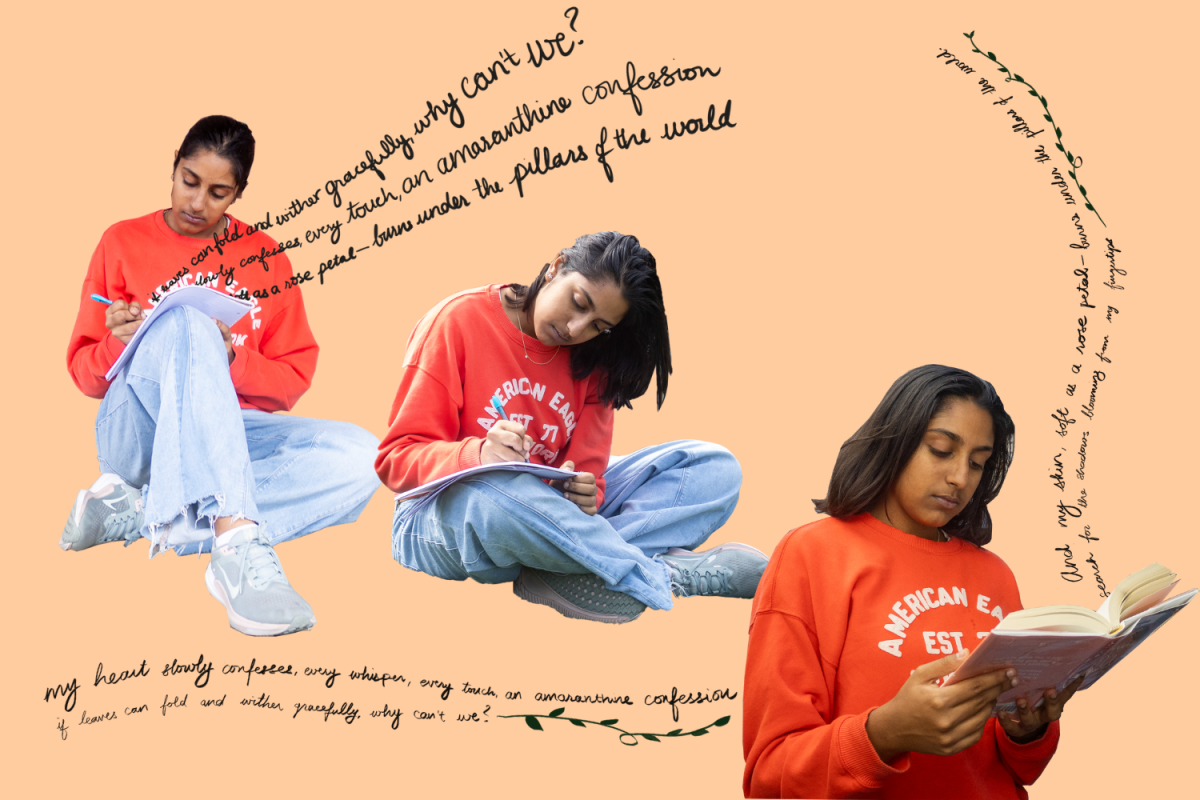

To curb misleading prompts and similar tactics, the California Privacy Rights Act, which took effect on Jan. 1, 2023, restricts deceptive design practices such as disguised advertisements, misleading prompts and hidden fees, striving for greater transparency and accountability in digital interactions. The law defines dark patterns as “a user interface designed or manipulated with the substantial effect of subverting or impairing user autonomy, decision-making or choice.”

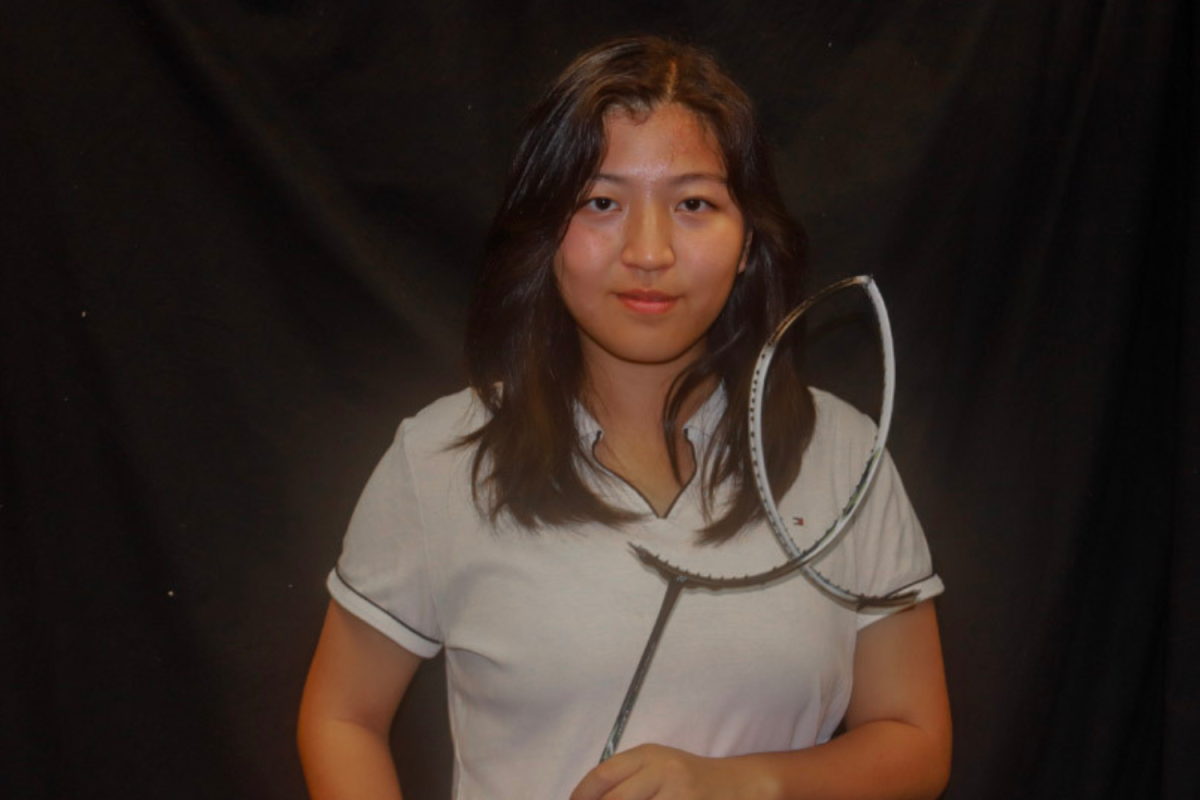

“I have definitely fallen prey to dark patterns before,” senior Anish Lakkapragadda said. “Even actions as small as closing a pop-up ad is often difficult, because the close button is hidden by the designer.”

Dark patterns on social media and other websites exploit fundamental aspects of psychology to influence user behavior. By preying on concepts like loss aversion, social proof and operant conditioning, these platforms create addictive behaviors that prioritize engagement over users’ well-being and privacy.

Loss aversion is the tendency to prefer avoiding losses over acquiring equivalent gains. A common example is subscription-based services that offer free trials: once users have access, canceling can feel like giving something up. Social proof is the tendency to look to others to determine what is appropriate or acceptable behavior in a particular context. Lastly, operant conditioning describes how voluntary behaviors are strengthened or weakened by their consequences. The most apparent example is notifications, which deliver variable and unpredictable rewards in the form of likes and comments and so reinforce the habit of checking back.

“Our designs should serve to enhance the user experience, not exploit vulnerabilities,” Craig said. “Let’s strive to build products that foster trust, respect and integrity.”