Over the last decade, demand for incorporating artificial intelligence into everyday tasks has surged. As AI becomes more prevalent in online advertising, healthcare and other fields, it is increasingly important that people be aware of the bias that is unavoidable in it.

“Algorithms have improved over the years and the computational power of machines is stronger than ever,” computer science teacher Bradley Fulk said. “This means we can do things with AI that we couldn’t before.”

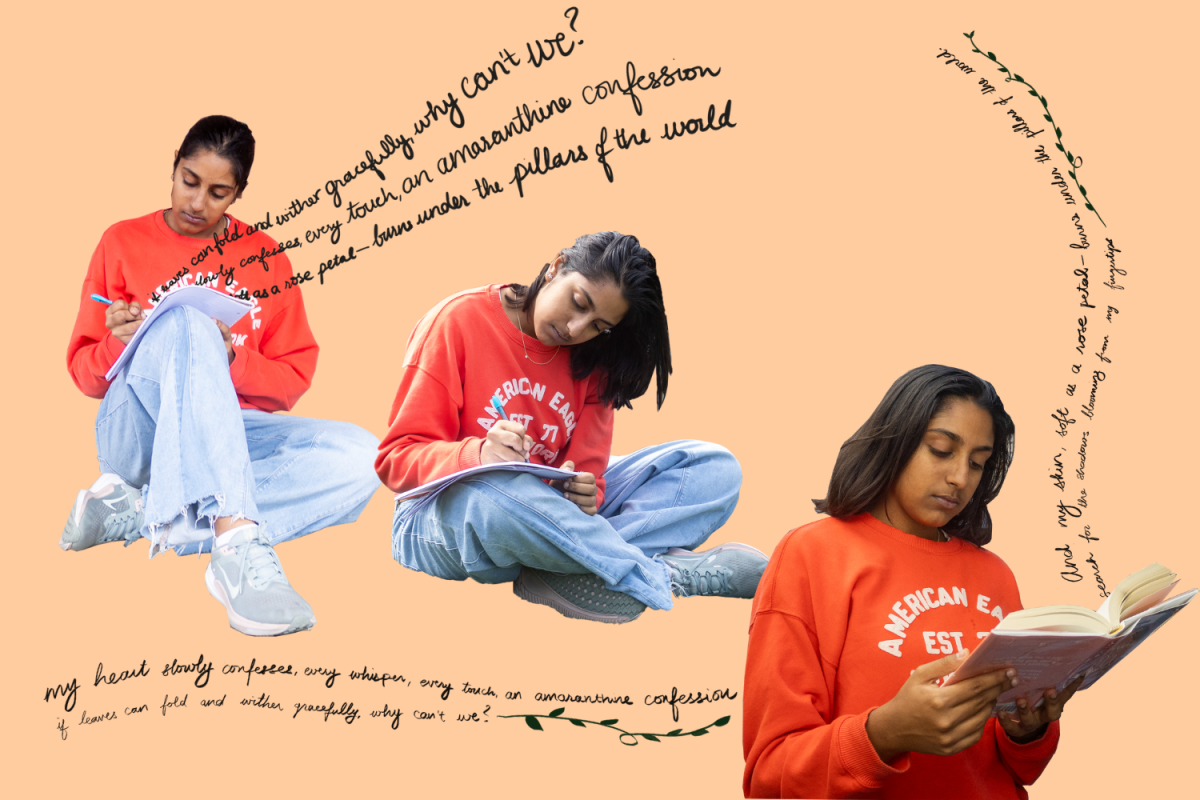

Because human developers select the data that algorithms are trained on, unconscious bias inevitably surfaces. All humans carry personal biases and stereotypes that influence their choices and judgments, so a developer's resolve to use unbiased datasets is not enough on its own to combat AI bias. The very definition of "fair" varies from programmer to programmer, and there are over 30 distinct mathematical definitions of fairness. As a result, there is no baseline or universal guideline for programmers to determine whether their algorithms are fair. Programmers most often curate datasets they believe represent the society around them, which may not reflect the full population their product will serve. Therefore, it is crucial that creators familiarize themselves with diverse demographics to minimize bias. Surveys that avoid undercoverage of minority groups and aim to understand how people in these groups view a product help combat bias and should be built into the development process.

Alongside skewed datasets, insufficient training data and a lack of product testing also contribute heavily to bias. Most of the images used to train the face recognition algorithm on Apple's iPhones were of white men, so it recognizes people who fit that description far more accurately. According to WIRED, it is far less capable of accurately recognizing faces of people in minority groups, specifically women and people of color. Large companies such as Apple must therefore test their products extensively to ensure these biases do not arise.

Another instance of AI bias occurred when an Amazon hiring algorithm unintentionally discriminated against women. The since-discontinued tool scanned resumes, and because it was trained on applications submitted over a ten-year period, most of which came from men, it taught itself to favor male candidates. The algorithm gave candidates scores from one to five and presented these scores to hiring managers. Unfortunately, it tended to rate applications it identified as likely coming from women lower; for example, it penalized resumes that included the word "women's," as in "women's chess club captain." This incident highlights how unpredictably machine learning programs can evolve, and why the public must be cautious and understand that bias is persistent.

“We don’t know what these models are capable of,” Machine Learning President Anish Lakkapragada said. “For things like these newer technologies, it’s important for us to treat them with caution.”

Racial discrimination in AI is regrettably common, and it was highlighted by the Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) algorithm. COMPAS, a tool many judges have used to estimate how likely a defendant is to reoffend, faced heavy backlash. Studies showed that the algorithm incorrectly flagged African American defendants as likely to reoffend at roughly twice the rate it mistakenly flagged white defendants. The data fed to the program was found to be skewed, assigning Black offenders a higher baseline reoffense rate. As a result, African American defendants received erroneously high risk assessment scores. These scores shaped judges' unconscious perceptions of the accused and contributed to unjustly harsh decisions about their cases. Because AI is increasingly being integrated into the justice system, this incident underscores the urgency of confronting and preventing AI bias.

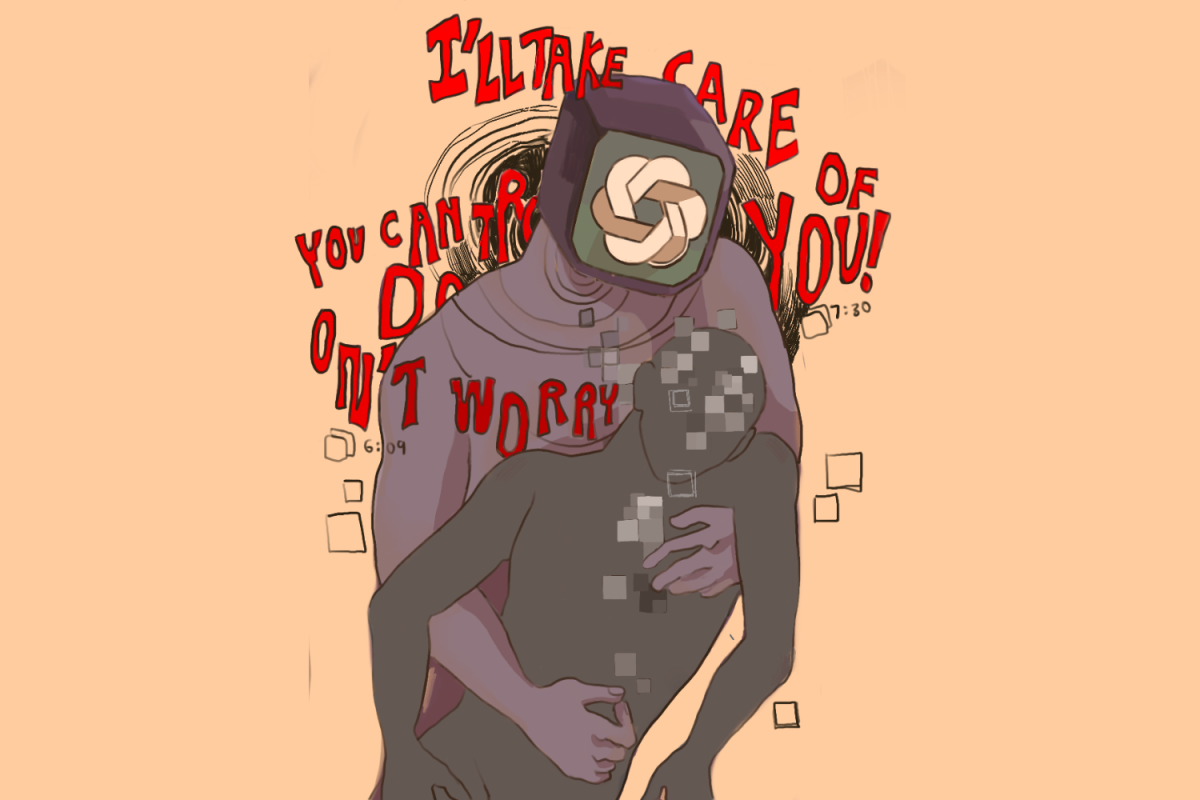

Admittedly, AI algorithms can reduce some kinds of bias compared with human decision-makers, since they are not subject to human emotion or volatility. However, AI is clearly not a foolproof way to eliminate bias. Algorithms can give the impression of complete fairness while containing hidden biases whose consequences fall on the general public. As these incidents across different fields reveal, current research and knowledge about AI and its capabilities are not sufficient for people to rely on it without caution.

“The biggest thing that I would point out to people is that AI is all very new,” Fulk said. “I would tell anyone to treat anything to do with machine learning with caution. It is not always going to be accurate.”