Facebook privacy breaches highlight flaws in U.S. legal system

March 1, 2019

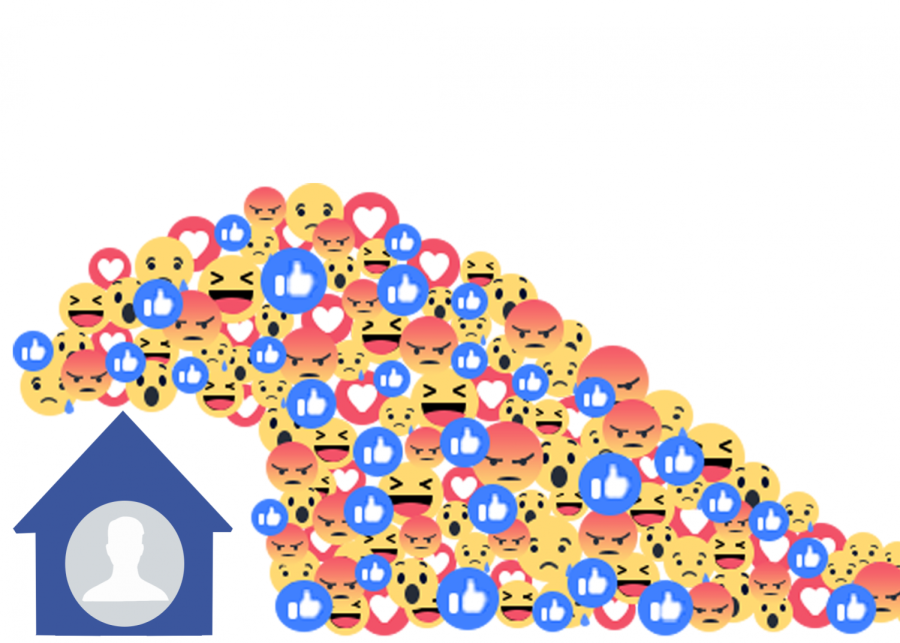

Big Brother, the omnipotent being who constantly watches those living in the dystopian world of George Orwell’s novel “1984,” may be fictional, but the novel’s premise has recently hit home amid reports of privacy breaches at social media giant Facebook dating back to the company’s founding in 2004. Facebook has failed millions of users in the United States, but U.S. laws have also fallen short in protecting citizens’ privacy. It is time that both Facebook and lawmakers be held accountable.

“Facebook has been irresponsible at best and reckless at worst with how it has treated its users’ information,” said Jennifer King, the Director of Consumer Privacy at Stanford Law School’s Center for Internet and Society. “It is clear from many of the strategic choices the company made from 2009 to 2015 that it was focused on growth by all means necessary, without much regard to how this would affect its users, especially when third parties misused Facebook users’ data.”

In April 2010, Facebook launched Open Graph, a platform that allowed third-party developers to access users’ personal data, such as their names, locations and online chats, as well as the data of users’ Facebook friends. Many users were unaware of the extent to which this platform was using their personal information.

In another instance, a mood-manipulation experiment that Facebook revealed in 2014 had altered the news feeds of 689,000 users to show either more negative or more positive posts, then tracked the users’ online responses to determine how the emotionally skewed posts affected them. This intrusive experiment was conducted without users’ knowledge and could be the first of many such experiments. Although the experiment was an obvious ethical violation of users’ privacy, no clear legislation has been passed to prevent similar experiments in the future.

“There are proposals for federal level privacy legislation afoot, but it’s unclear exactly how they may address the precise issues that we’ve seen with Facebook,” King said.

In another case, the Guardian and The New York Times revealed in March 2018 that Cambridge Analytica, a political consulting firm, had obtained the data of millions of Facebook users without their consent, unearthing a massive data scandal. In 2013, nearly 300,000 users took a psychological quiz through “thisisyourdigitallife,” an app created by Cambridge University academic Aleksandr Kogan, which harvested the profile data of those users and their friends without their explicit permission, all with Facebook’s knowledge.

Then, in 2014, Facebook changed its rules to limit developers’ access to user data and to require developers to ask for users’ permission before accessing it. However, the change was not retroactive, so it left improperly acquired data in the hands of Kogan and the developers who had used Open Graph. In these incidents, Facebook had a duty to obtain users’ consent before giving their data to third parties and to be transparent about what it and those third parties were doing with the data. It failed to do either.

“I don’t necessarily have a problem with the data being used,” said Computer Science teacher Brad Fulk. “I have a problem with the fact that it was being used without people knowing, because if that floodgate of information is left open, then any other personal information could fall under the same umbrella. If one company is allowed to do it, who knows how many other companies are using the same data and for what purpose?”

Before the 2016 presidential election, Cambridge Analytica was also found to have used people’s psychological data, acquired through Facebook, to help the campaigns of presidential candidates Ted Cruz and Donald Trump without Facebook users’ knowledge. When these privacy violations came to light in March 2018, Cambridge Analytica went so far as to deny that Kogan’s data had been used in connection with Trump’s campaign.

It was also revealed in March 2018 that Facebook had known about the massive data theft and did nothing, yet it did not face legal consequences, because most states, with exceptions such as California, lack laws that require immediate disclosure of security breaches. This is a major gap in U.S. data protection law; although investigations followed, new laws are still needed to prevent incidents like these.

“To date, federal law regarding privacy has been mostly focused on specific sectors, such as health privacy and student privacy. States have been left to fill in the gaps,” said Riana Pfefferkorn, the Associate Director of Surveillance and Cybersecurity at the Stanford Center for Internet and Society. “But it is a significant challenge for companies to try to comply with a patchwork of dozens of state laws, which may have inconsistent, or even conflicting, requirements. A single federal standard that provides a baseline of privacy protection would provide consistency both for us as individuals and for companies trying to run their businesses in compliance with the law.”

There is no telling exactly how many people in the U.S. have been affected by Facebook’s privacy violations, or to what extent, but these incidents make clear that U.S. technology and privacy laws need to be reconsidered: they must apply retroactively, and regulations on data use must be tightened.

“It is time to enact comprehensive federal-level privacy legislation that will regulate how companies such as Facebook are permitted to collect, use, keep, store, and share information about people,” Pfefferkorn said.

Other countries, such as those in Europe, have stricter privacy laws and regulations that U.S. lawmakers ought to emulate.

For example, the European Union’s General Data Protection Regulation (GDPR), which took effect in May 2018, regulates how companies store personal data and requires them to report a breach within 72 hours. U.S. policymakers have not adopted a comparable law. American data regulation focuses on protecting the integrity of commercial data, while the European Union (EU) has put individual rights before the interests of businesses: under EU law, companies are held liable and face penalties if they fail to protect data subjects’ information.

“The GDPR in Europe has raised a lot of visibility about the consequences of being reckless with customer data,” King said. “However, I think we still need to see more regulation to make an effect there.”

European privacy laws also include one major element that U.S. laws lack: retroactivity. In February 2018, a Belgian court ordered Facebook to stop collecting users’ online data after finding that the company had not sufficiently informed users of the collection. Facebook was ordered not only to stop but also to delete the data it had already collected, or face fines of up to €100 million. If U.S. laws applied retroactively, similar rulings could have had a major impact on the incidents involving Open Graph, Aleksandr Kogan and Cambridge Analytica.

“Regulation is always running behind technology and trying to catch up, so it will always be a challenge for lawmakers to regulate today for unforeseen new uses of online data that will happen tomorrow, but that does not make it not worth trying,” Pfefferkorn said.

Technology is advancing more rapidly than ever, and lawmakers have a responsibility to hold companies like Facebook accountable by passing laws that protect citizens’ right to privacy, as EU countries have done. Facebook may not have been directly responsible for every one of these incidents, but it should have been transparent with users and asked for their consent. As our world becomes increasingly digital, it is essential that lawmakers protect the individual’s right to privacy on platforms that are becoming a necessity.