At what cost?

December 12, 2022

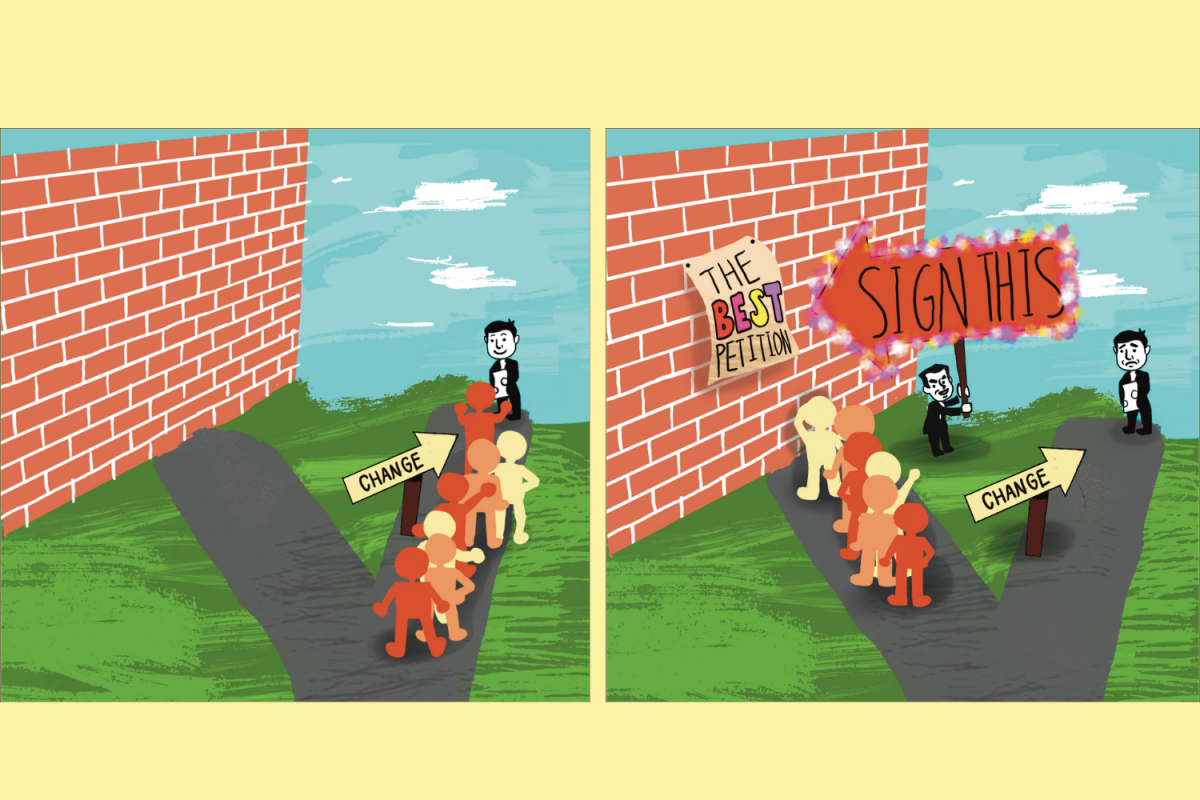

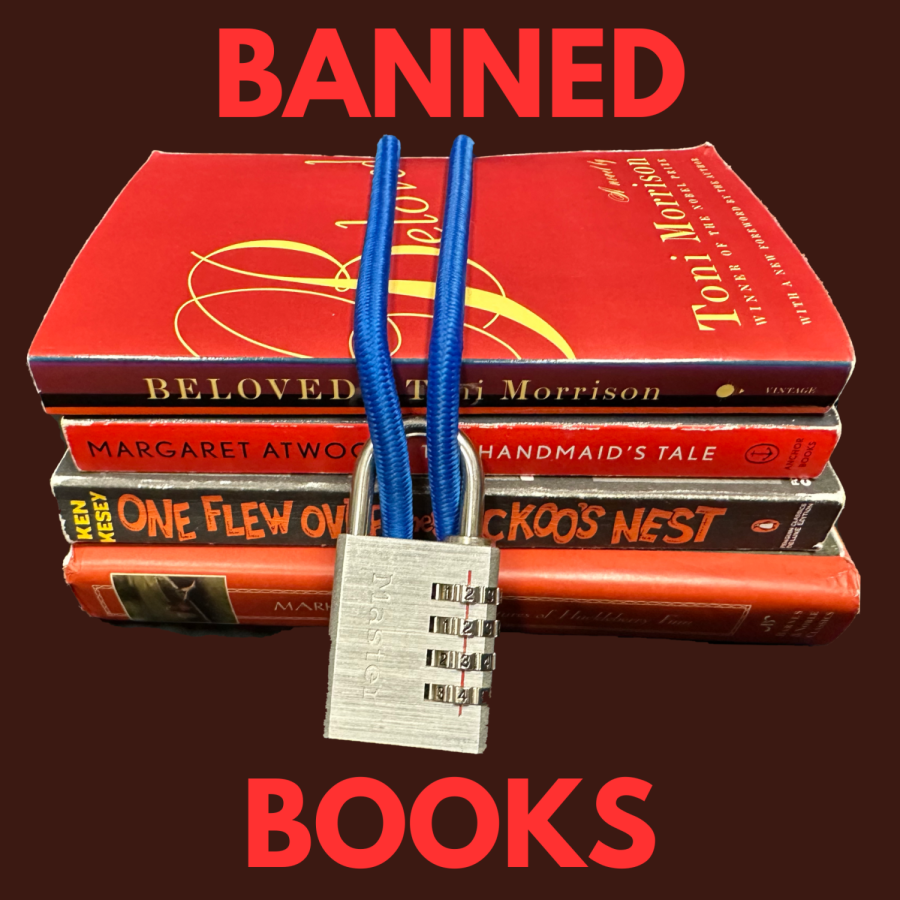

Freedom of speech is a cornerstone of American democracy. However, limits on harmful speech are vital to ensure safety. The marketplace of ideas, often touted as a core argument for defending freedom of speech, is a utopian fantasy that rarely fosters a “level playing field.” Therefore, privately owned information infrastructure and social platforms should continue to be allowed to enforce their own regulations, even if that means censoring certain types of speech.

“I think the marketplace of ideas is becoming more polarized and tribal,” said social studies teacher Mr. Williams. “I think that by consuming specific types of media that never challenges their point of view, it makes it hard for people to accept other perspectives.”

Free speech absolutist Elon Musk acquired Twitter in October with the aim of making its content moderation system more lenient. In the aftermath of Musk’s takeover, use of gay slurs rose to 3,964 a day, antisemitic posts rose by more than 61%, and accounts identifying as part of ISIS came roaring back. The lack of hate speech policies has allowed bigoted people to express themselves rawly in the public eye, quickening the spread of dangerous views.

The First Amendment has a broad definition of freedom of speech and expression, which was acceptable in the 19th century but is now becoming obsolete and in desperate need of revision. The Constitution currently protects hate speech as long as it does not incite violence. However, most hateful speech will eventually lead to violence. The modern standard for dangerous speech comes from Brandenburg v. Ohio (1969), which holds that only speech that directly incites imminent lawless action can be prosecuted.

“Personally, I think especially on public platforms such as social media, there should be mechanisms to reduce hate speech,” said JSA President Shyon Ganguly. “Especially language that promotes objectively harmful ideologies such as white supremacy.”

In particular, the internet fosters hateful speech, and its role is often underestimated. White supremacist groups traffic in racist tropes and, although they may initially be nonviolent, build thriving communities of hate. Skewed framing of subjects drives extremists to violently attack public places. Dylann Roof, who killed nine African Americans in Charleston, S.C., actively participated in online white supremacist groups, where his views were acknowledged and validated. One could argue that hate speech doesn’t pull the trigger, but that doesn’t mean it doesn’t create a climate where dangerous acts are more likely.

“I think in American history, before social media, there have always been crazy conspiracy theories and radical groups,” said Williams. “Now there is a larger forum for this information to get out there, due to social media.”

Since social media platforms are private companies and can regulate speech based on their own preferences, a level playing field for all users in the “marketplace of ideas” is practically impossible. As a private corporation, Twitter is within its rights even to run a biased algorithm. Research by Twitter employee Luca Belli has even shown that the algorithm amplifies conservative tweets, meaning not every voice is heard equally. However, the cause remains undetermined.

“The diversity of ideas that you generally see in your day to day life are not represented as well in government. Currently, it is difficult to represent the marketplace of ideas,” said Ganguly.

Not all speech is equal. In today’s climate, it is evident that truth cannot always drive out lies. In these conditions, it is necessary to revise the First Amendment and to continue enforcing certain censorship rules suited to the modern state of the world.