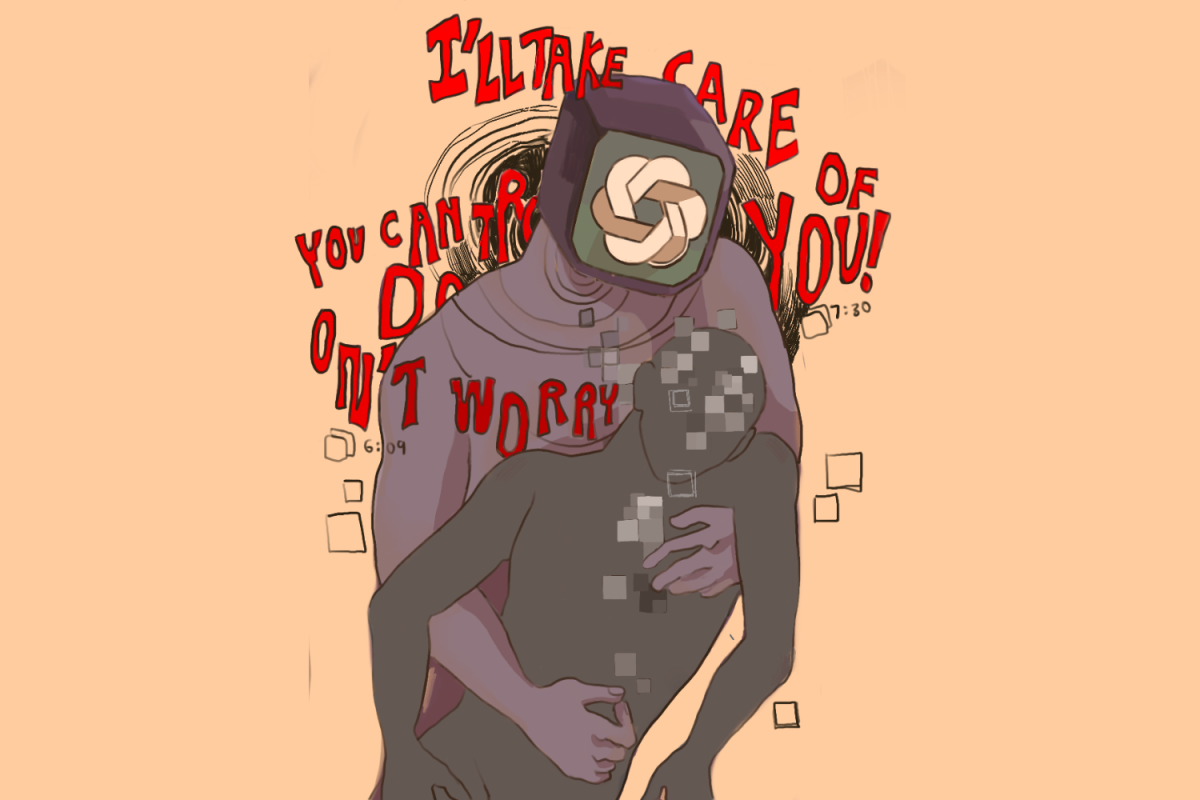

From doing homework to being a 24/7 companion, artificial intelligence chatbots can no longer be called just tools. Gradually, they are replacing human relationships and connections. Although chatbots may offer quick, convenient responses, they are poor substitutes for therapists and trusted people. The more people rely on chatbots for emotional support and therapy, the more they isolate themselves from genuine human connection, making it essential to build a supportive community where they feel comfortable reaching out to others.

For students juggling extracurricular activities, AP classes and more, chatbots are easy tools to use. Unfortunately, what starts as a study aid can all too easily turn into overreliance on AI for all schoolwork and even simple tasks, eventually replacing tutors and help from friends with AI relationships.

“AI’s job is not to replace genuine human connection,” senior Aarit Parekh said. “I think that’s something that humans can only do, but for other tasks, such as advanced analysis, I think AI is going to be the future of all of these.”

Today, more students are turning to AI as a convenient alternative to therapy. Therapy is expensive, ranging anywhere from $65 to $300 for a single session, and it can be difficult to open up about one's struggles to a stranger. Chatbots such as ChatGPT and Gemini, by contrast, are only a couple of clicks away, tailor their responses to each user and reply without judgment. But their accessibility doesn't mean they are actually effective.

“It’s really accessible and it feels anonymous,” school-based psychologist Dr. Brittany Stevens said. “You want a response without judgement, which could lead people to seek support from AI because it is a real barrier for people to have to tell another human that they’re struggling. But one thing that AI cannot do right now is hear tone, which is a very powerful element of communication that makes the difference in how we respond to other people.”

Besides overlooking tone, AI chatbots are not licensed therapists; they lack the emotional insight and empathy that trained professionals have, and they may provide false diagnoses. In serious cases, such as when a user mentions criminal activity or suicide, AI may fail to recognize the danger and cannot contact authorities when necessary, failing to ensure user safety at critical moments.

AI companions can also create an echo chamber of validation. While constant positive reinforcement might seem supportive, it keeps users from challenging their own perspectives and makes them dependent on outside approval rather than building self-worth.

“Chatbots take down barriers very easily, where we can get instant responses and tons of validation,” school-based therapist Jenna Starnes said. “But that’s not how our real relationships work. We go into the real world, and we’re like, ‘This is awkward. How do I have a conversation? Wait, it’s not all about me. Now, I need to ask you questions.’ It makes us more limited.”

Moreover, loose company guidelines can put users at risk. Meta's internal guidelines, for instance, permitted its AI to engage in sexually provocative conversations, even with underage users. Stricter safeguards to protect minors from inappropriate AI interactions must be put in place before chatbots can be called reliable.

Chatbots also pose a privacy risk. Generative AI tools can memorize personal information provided to them, enabling targeted identity theft or fraud. In April, Grok users' personal data were exposed to search engines, revealing a variety of user queries along with full names and sensitive information about topics such as mental health and relationships. The incident underscores the dangers of sharing personal information with an AI chatbot.

“AI is not a therapist,” senior Ponkartihikeyan Saravanan said. “There needs to be improvements, like less hallucinations. Until then, you’re sharing your emotions with something that can’t truly understand how you’re feeling.”

Although AI isn't yet fit for therapy, it can still be used for a variety of other purposes. Chatbots can help in clear-cut roles, such as walking a user through a panic attack or coaching specific coping skills. They may also be a great resource for gathering information, as long as that information is verified.

“Sometimes people give pretty bad advice too,” said Mark Healy, psychology department chair at De Anza College. “AI is good for searching the web in general, and you can get advice from a safe place.”

Ultimately, it is paramount to build a supportive community where students feel comfortable expressing themselves instead of turning solely to chatbots for connection. As AI gains prominence, it's crucial for students not only to understand its limitations as a therapeutic tool but also to seek help from qualified professionals.

“It would be really important at the district level to work with the library media teachers to focus on how to help, in this case, issues related to chatbots and seeking mental health support,” Stevens said. “I don’t think that we should fear it and block it. I think we should try to lean in hard to help students access support in a variety of ways whenever possible.”